Overview

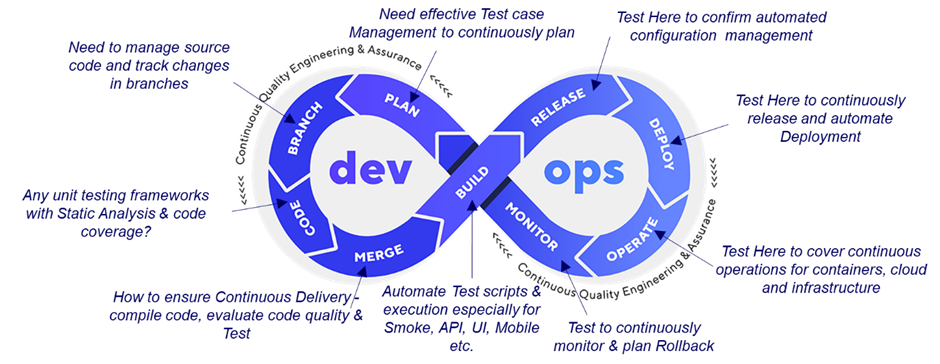

Continuous testing is a modern technique that depends on actionable feedback at each stage of the software delivery cycle. The main goal of Continuous Testing is to test early at all phases of the SDLC with automation, test as often as possible, and get faster feedback on the builds.

Continuous testing executes automated tests as part of the software delivery pipeline to obtain immediate feedback on the business risks associated with a software release candidate, thus gaining actionable feedback at each stage of SDLC.

Key Principles of Continuous Testing

Test as early as possible: By testing early and often, you can identify issues and defects early in the development process, which reduces the cost and time required to fix them.

Test automation and in-sprint automation: Test automation is the key enabler for continuous testing. It is recommended to automate tests in-sprint so that the full regression suite can run automatically as part of the automated pipeline.

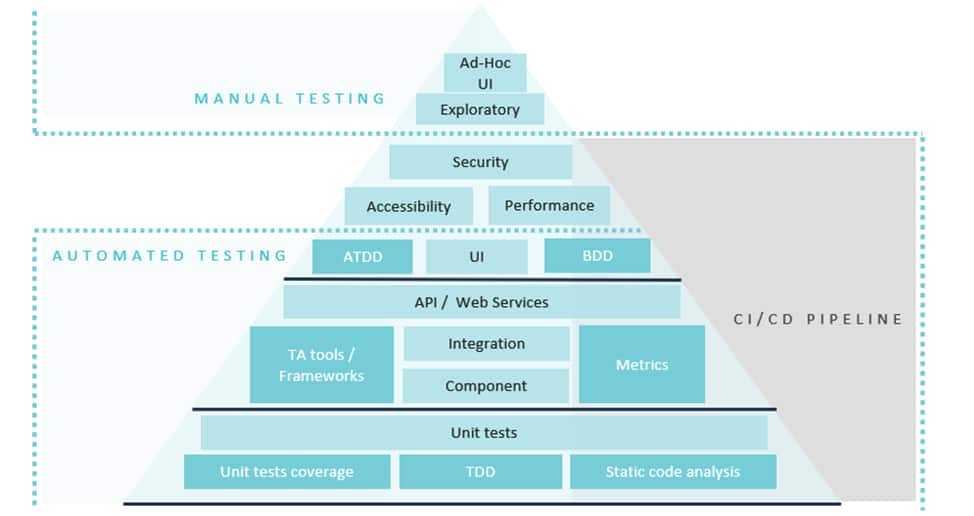

Balanced test pyramid: The testing pyramid is a model that breaks automated tests into three main categories: unit, integration, and UI (or e2e) tests. Grouping tests into buckets of different granularity makes the test suite more efficient and helps developers and QA specialists achieve higher quality.

The bottom layer of the pyramid consists of unit tests, which are small, fast, and focused on testing individual functions or methods.

The middle layer consists of integration tests, which test how different system parts work together.

The top layer consists of UI (or e2e) tests, which test how the system works from a user’s perspective.
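The pyramid shape described above can be checked mechanically. The following is a minimal sketch, assuming each test in a suite is labelled with its layer; the layer names, the `layer_counts` and `is_pyramid_shaped` helpers, and the example inventory are all hypothetical and not part of any specific tool.

```python
from collections import Counter

# Hypothetical layer names, ordered from the base of the pyramid to the top.
LAYERS = ("unit", "integration", "e2e")


def layer_counts(test_layers):
    """Count tests per layer; layers with no tests count as zero."""
    counts = Counter(test_layers)
    return {layer: counts.get(layer, 0) for layer in LAYERS}


def is_pyramid_shaped(counts):
    """True when each layer has at least as many tests as the layer above it."""
    sizes = [counts[layer] for layer in LAYERS]
    return all(lower >= upper for lower, upper in zip(sizes, sizes[1:]))


# Made-up inventory: one label per test in an illustrative suite.
suite = ["unit"] * 120 + ["integration"] * 30 + ["e2e"] * 8
counts = layer_counts(suite)
print(counts, is_pyramid_shaped(counts))
```

A check like this only compares counts. It says nothing about whether the tests in each layer are well designed.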

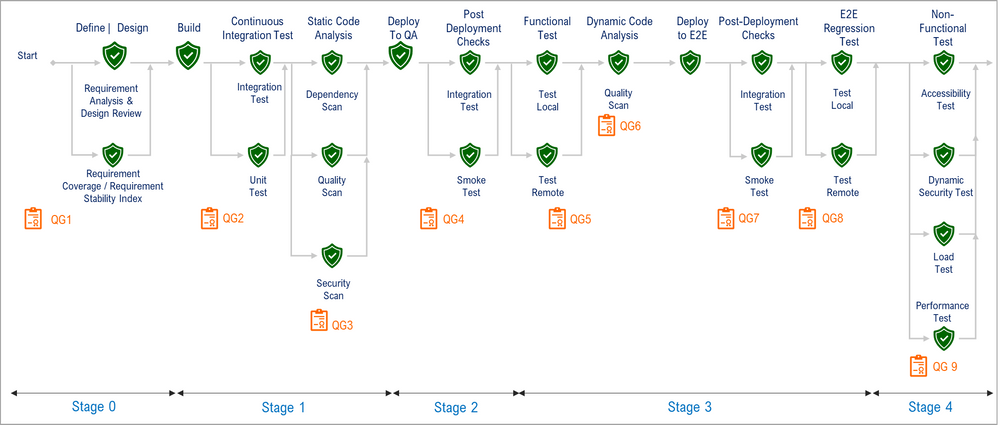

Automated Pipelines with Quality Gates: Automated pipelines with Quality Gates are an important part of continuous testing. Quality gates are checkpoints that ensure that code meets specific quality standards before proceeding to the next stage of the pipeline. Automated quality gates can help ensure that code is tested thoroughly and meets certain performance and architecture metrics.

Quality Gates in Continuous Testing

Quality Gates can be implemented at different pipeline stages, including unit testing, component testing, integration testing, and end-to-end testing. By implementing quality gates at each stage of the pipeline, you can ensure that the code is tested thoroughly and meets specific quality standards before proceeding to the next stage.
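As an illustration of gates applied stage by stage, here is a minimal sketch. It assumes each gate is a minimum threshold on a named metric; the `Gate` class, the function names, the stage names, and the thresholds are hypothetical and do not reflect the configuration of any particular CI/CD product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gate:
    """One quality gate: a metric name and the minimum value it must reach."""
    metric: str
    minimum: float


def evaluate_gates(gates, metrics):
    """Return the gates that fail; a missing metric counts as a failure."""
    failed = []
    for gate in gates:
        value = metrics.get(gate.metric)
        if value is None or value < gate.minimum:
            failed.append(gate)
    return failed


def run_pipeline(stages, metrics_by_stage):
    """Walk stages in order and stop at the first stage whose gates fail."""
    for stage, gates in stages:
        failed = evaluate_gates(gates, metrics_by_stage.get(stage, {}))
        if failed:
            return stage, failed
    return None, []


# Hypothetical stages and thresholds, chosen only for illustration.
stages = [
    ("unit", [Gate("coverage_percent", 80.0)]),
    ("integration", [Gate("pass_rate_percent", 100.0)]),
]
metrics = {
    "unit": {"coverage_percent": 86.5},
    "integration": {"pass_rate_percent": 97.0},
}
blocked_at, failed = run_pipeline(stages, metrics)
print(blocked_at, [gate.metric for gate in failed])
```

Treating a missing metric as a failure is a deliberate choice in this sketch: a stage that did not report its results should not be allowed to pass silently.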

Continuous Testing Best Practices

The Continuous testing approach recommends various best practices listed below:

Dev Practices

- Code Quality - Static code analysis: Static code analysis finds code defects by examining the code without executing the program. It efficiently identifies concurrency, data flow, dynamic memory, and numerical defects. Tools include SonarQube, CAST, and Veracode. Ref. Code Quality Testing.

- Code Reviews: With continuous code reviews, the team commits to reviewing teammates' proposed commits to trunk promptly. Developers package up their commits for review and quickly get them in front of peers. Ref. Code Review.

- Version Control: Version control systems (source control, source code management systems, or revision control systems) are used for keeping multiple versions of the files so that when a file is modified, the previous revisions are still accessible. Ref. Version Control.

- Unit Testing: Unit testing exercises a specific section of code to ensure it does what is expected. Developers perform it during the development phase. Static code analysis, data flow analysis, code coverage, and other software verification processes can also be applied at this stage. Ref. Unit Testing.

Infrastructure

- Environment Provisioning: Scripting and automation tools enable automated environment provisioning, which delivers instances on-demand by automatically allocating and providing computing resources when needed for only the duration required. Ref. Test Environment Management.

- Automated Pipelines with Quality Gates: The automatic quality gates allow the pipeline to self-monitor the code quality delivered. It is also possible to place a manual verification step into a CI/CD pipeline to prevent accidental errors. Ref. Automated CI/CD Pipelines.

Process

- Engineering culture: Continuous testing requires an organizational culture shift. It is a mindset that relies heavily on automation. Anything that can be automated should be automated to ensure faster release cycles, e.g., Automated Unit Tests, Automated Functional and Non-Functional Tests, Automated Regression Tests, and Automated Deployments. The following two culture changes will help an organization move to continuous testing:

- Shared Responsibility: With continuous testing, quality is built in from development to deployment, at every step of the release cycle. The whole team must take on testing initiatives. Developers are responsible for automating the unit tests and integrating them with the CI/CD pipeline. Testers focus on functional and non-functional testing and on integrating those tests into the pipeline. This continues with deployments into production, as operations can use all the tests that have been automated.

- Collaboration: Developers, Testers, and Operations should all work together. While Developers create the APIs for their applications, Testers can start automating tests for those APIs by working with Developers. The Operations team works with the Developers and Testers to integrate the automated tests into build deployments. As a result, Operations has a set of tests it can run at every build release, and testing occurs at every pipeline stage.

- Agile Practices: Continuous Testing is an essential part of Agile, in which software is developed in small steps with regular feedback and testing. It helps teams deliver software faster and with fewer defects.

Test Automation

- Test Pyramid: Refer to the Balanced Testing Pyramid above.

- Test Design Practices: Whether automated or manual, any check that validates the code must be fully compatible with a CI/CD system.

- Reliability: A test case must take every precaution to avoid a false negative or false positive. It must be repeatable without outside human intervention; it must clean up after itself.

- Importance: Prioritize the most critical test cases so that they run first and report quickly. A typical unit test executes in microseconds and can generally be run in parallel. An integration test might take milliseconds, which is several orders of magnitude slower than a unit test. A specialty test, or any test that requires human intervention, is significantly slower still and delays the overall reporting of results.

- Specificity: A good test case should individually do as little as possible to produce a pass/fail outcome as quickly as possible. Every test case should be a clear answer to a straightforward question, which adds up to a test suite that will explain the complete set of functionalities under test.
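The reliability and specificity practices above can be illustrated with a small test case. This is a minimal sketch using Python's standard `unittest` and `tempfile` modules; `write_report` is a hypothetical function under test, invented here only to give the tests something to check.

```python
import tempfile
import unittest
from pathlib import Path


def write_report(directory, name, text):
    """Hypothetical function under test: writes a text report and returns its path."""
    path = Path(directory) / name
    path.write_text(text, encoding="utf-8")
    return path


class WriteReportTest(unittest.TestCase):
    def setUp(self):
        # Each test gets its own scratch directory, so runs never share state.
        self._tmp = tempfile.TemporaryDirectory()
        # addCleanup runs even when the test fails, so nothing is left behind.
        self.addCleanup(self._tmp.cleanup)

    def test_report_contains_given_text(self):
        # One question per test: is the text written unchanged?
        path = write_report(self._tmp.name, "report.txt", "all green")
        self.assertEqual(path.read_text(encoding="utf-8"), "all green")

    def test_report_uses_given_name(self):
        # A separate question gets a separate test.
        path = write_report(self._tmp.name, "report.txt", "")
        self.assertEqual(path.name, "report.txt")


def run_suite():
    """Run the tests in-process and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(WriteReportTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Because every test creates and removes its own directory, the suite can be repeated or run in any order without human intervention, which is what a CI/CD pipeline needs.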

In-sprint automation:

- Participation & Collaboration: All QA team members should participate in the in-sprint automation initiative. This will help in collaborative decision-making for sprint testing objectives and faster results realization.

- Prioritize test design: Prioritize test design over the total number of tests. For complex, modular applications with multiple components, the number of tests matters less to overall quality than how well they are designed. With a focus on superior test design, QA teams can ensure that the requirements relevant to each test asset are adequately validated before the modules make their way into the final software.

- The right framework: Select a framework that covers the essential capabilities and is versatile and flexible enough to customize for your operational scenarios.

- Abstraction & Virtualization: Abstraction of application components is essential to achieving in-sprint automation. The abstracted components can use virtualization to set up their runtime environments, which facilitates parallel testing activities.

Shift-Left Testing:

- QA teams can leverage open-source frameworks for automation like Selenium to write automated tests that can be executed repeatedly and frequently on schedule, with the support of environment virtualization tools.

- The Dev and QA teams can communicate about the bugs they find through shared knowledge platforms such as Confluence. The dev team can use static code analysis tools such as SonarQube and Veracode to check their code against established standards.

Production Testing and Monitoring:

- Canary Releases: Canary release is a technique to reduce the risk of introducing a new software version in production by slowly rolling out the change to a small subset of users before rolling it out to the entire infrastructure and making it available to everybody.

- Load Testing: Load testing simulates a real-world load on the system to see how it performs under stress. It helps identify bottlenecks and determine the maximum number of users or transactions the system can handle.

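- Application Monitoring: Application monitoring is the process of observing, in real time, how the application behaves for its users. It can be categorized into two main groups: Real User Monitoring (RUM), which observes actual users interacting with the application, and synthetic monitoring, which uses scripted interactions.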

- Other testing types that can be considered under Production Testing are Chaos Engineering, Automatic broken link checking, Regression testing, Visual regression testing, and Accessibility testing.
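The canary release technique listed above depends on routing a small, stable subset of users to the new version. One common way to do that is to hash each user identifier into a fixed bucket. The following is a minimal sketch under that assumption; `canary_bucket` and `serves_canary` are hypothetical names, and real rollouts are usually handled by a load balancer or feature-flag service rather than application code like this.

```python
import hashlib


def canary_bucket(user_id: str) -> int:
    """Map a user id to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def serves_canary(user_id: str, rollout_percent: int) -> bool:
    """True when the user falls inside the current rollout percentage."""
    return canary_bucket(user_id) < rollout_percent


# Widening the rollout keeps earlier canary users in the canary group,
# because a user's bucket never changes between calls.
for percent in (0, 5, 50, 100):
    print(percent, serves_canary("user-42", percent))
```

Using a hash instead of a random draw means the same user sees the same version on every request, which keeps the experience consistent while the rollout percentage is increased.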

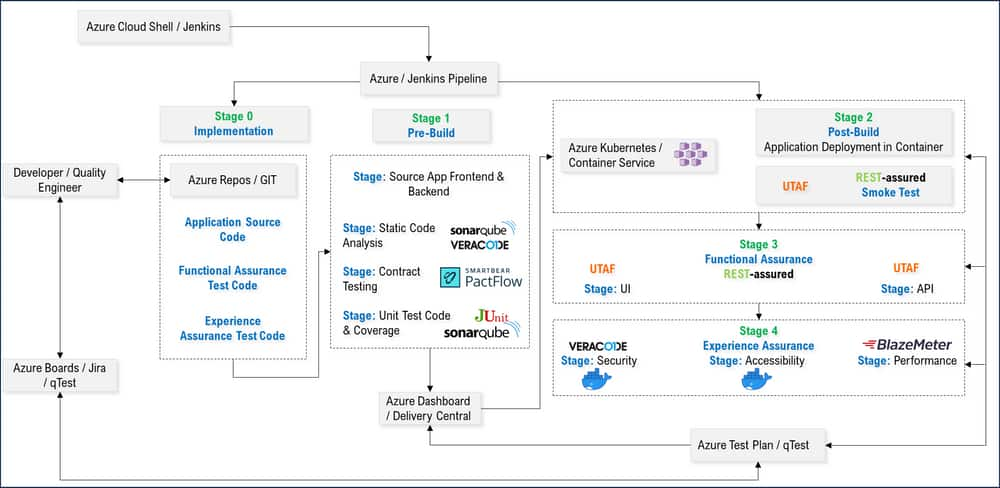

Continuous Testing in CTC

Stage 0 (Landing Zone):

Application Source Code

Functional Assurance Test Code

Experience Assurance Source Code

Stage 1 (Pre-Build):

Source App Front-end & back-end: Front-end testing validates that the GUI elements of the software function as expected and per the requirements. Back-end testing validates the database side of web applications or software.

Static Code Analysis / Code Quality Testing: Static analysis refers to reviewing the software source code without executing it. Developers carry out this analysis with tools such as SonarQube to check the syntax of the code, adherence to coding standards, code optimization, and similar concerns. It can be performed at any point during the development or configuration phase of the project lifecycle.

Unit Test Code & Coverage: Unit testing is a software testing technique that focuses on verifying the correctness and functionality of individual code units. Each unit, typically a function, method, or class, is tested independently to ensure it behaves as expected and meets its intended functionality.

Stage 2 (Post-Build):

Smoke Test: Smoke testing verifies a deployed build by exercising a minimal set of critical flows (high-level functionality) to confirm that the application is stable enough for further development or testing. Execution should not exceed 15 minutes.
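A smoke run with a time budget could be sketched as follows. The 15-minute ceiling comes from the text above; the `run_smoke_checks` function, its result labels, and the example checks are hypothetical. Note that this sketch only checks the budget between checks and does not interrupt a check that is already running.

```python
import time

# The 15-minute ceiling comes from the text; everything else is illustrative.
SMOKE_BUDGET_SECONDS = 15 * 60


def run_smoke_checks(checks, budget_seconds=SMOKE_BUDGET_SECONDS, clock=time.monotonic):
    """Run named checks in order; stop on the first failure or when the budget is spent.

    Returns (passed, results), where results maps each check that was reached
    to "pass", "fail", or "timeout".
    """
    started = clock()
    results = {}
    for name, check in checks:
        if clock() - started > budget_seconds:
            results[name] = "timeout"
            return False, results
        ok = bool(check())
        results[name] = "pass" if ok else "fail"
        if not ok:
            return False, results
    return True, results


# Made-up critical flows; real checks would call the deployed build.
checks = [
    ("home page loads", lambda: True),
    ("login succeeds", lambda: True),
]
print(run_smoke_checks(checks))
```

Stopping at the first failure keeps feedback fast: a build that fails a critical flow is not stable enough for the later stages, so there is little value in running the remaining checks.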

Stage 3 (Functional Assurance):

UI Testing: UI testing is a testing type that helps testers ensure that all the fields, labels, buttons, and other items on the screen function as desired. It involves checking screens with controls, like toolbars, colours, fonts, sizes, icons, and others, and how they respond to user input.

API Testing: API testing is a type of software testing that involves testing application programming interfaces directly and as part of integration testing to determine if they meet expectations for functionality, reliability, performance, and security.
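To show what testing an API directly looks like, here is a minimal sketch that uses only the Python standard library. It starts a small stand-in HTTP service on the local machine and asserts on the response. The `/health` endpoint, its payload, and the `HealthHandler` and `check_health` names are hypothetical; a real test would target the application's own API, typically with a dedicated tool.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Stand-in service with a single hypothetical /health endpoint."""

    def do_GET(self):
        if self.path == "/health":
            body = json.dumps({"status": "ok"}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep test output quiet


def check_health():
    """Start the stand-in service, call /health, and return (status, payload)."""
    server = HTTPServer(("127.0.0.1", 0), HealthHandler)  # port 0: any free port
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        conn.request("GET", "/health")
        response = conn.getresponse()
        payload = json.loads(response.read())
        conn.close()
        return response.status, payload
    finally:
        server.shutdown()
        server.server_close()


status, payload = check_health()
assert status == 200
assert payload == {"status": "ok"}
```

The test checks both the status code and the response body, since a 200 response with the wrong payload would still be a functional defect.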

Stage 4 (Experience Assurance):

Security Testing: Security testing is a type of software testing that identifies vulnerabilities, threats, and risks in a software program so that they can be addressed before attackers exploit them.

Accessibility Testing: Accessibility testing evaluates a product, service, or environment to determine how accessible it is to people with disabilities.

Performance Testing: Performance testing is a type of software testing that evaluates the performance and scalability of a system or application under its expected workload. It determines how the system behaves in terms of responsiveness and stability under a particular workload.
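Responsiveness under load is often summarized with a latency percentile and compared against a target. The following is a minimal sketch of that calculation using the nearest-rank method; the `percentile` and `meets_latency_target` helpers, the sample latencies, and the 250 ms target are hypothetical values chosen for illustration.

```python
def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct in 1..100."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 1 <= pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(samples)
    # Integer ceiling of pct/100 * n, which avoids floating-point rounding.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]


def meets_latency_target(samples_ms, pct, limit_ms):
    """True when the chosen percentile of the samples is within the limit."""
    return percentile(samples_ms, pct) <= limit_ms


# Made-up response times in milliseconds from an illustrative test run.
latencies_ms = [120, 95, 110, 130, 480, 105, 98, 115, 125, 102]
print(percentile(latencies_ms, 50), meets_latency_target(latencies_ms, 95, 250))
```

A percentile is used here instead of the average because a few very slow requests can be hidden by a mean but still affect real users.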

Adoption expectations

| System Components | MVP | MVP+ |
|---|---|---|
| Static Code Analysis | + | |
| Code Reviews | + | |
| Version Control | + | |
| Unit Testing | + | |
| Environment Provisioning | | + |
| Automated pipelines with Smoke testing as Quality Gate at all Envt. | + | |
| Automated pipelines with Regular Quality gates at all Envt. | | + |
| Automated pipelines with Advanced Quality Gate at all Envt. | | + |
| Balanced Test Pyramid | + | |
| Test Design Practices | + | |
| In-sprint automation | | + |

Tools

| Functionality | Tool Name |
|---|---|
| Test automation and in-sprint automation | Git, UTAF |
| Version Control Collaboration | Azure DevOps Repo, Bitbucket |
| Build automation tools | Jenkins, Bamboo, TeamCity, Azure DevOps |
| Containerization and orchestration tools | Docker, Kubernetes, OpenShift |
| Test Automation | JUnit, NUnit, Rest Assured, Selenium |

Roles

| Name | Responsibilities |
|---|---|
| QE Architect | |
| Developer | |
| Build Engineer | |
| Test Automation Engineer (TAE) | |
| QA Specialist (Functional SME) | |