Overview

This document provides a high-level description of how Canadian Tire Corporation’s general software performance testing strategy is implemented. It covers general approaches to building a performance testing infrastructure with load generation and monitoring tools, as well as the risks, metrics, and components to be tested.

Definition

Performance testing is a type of software testing that evaluates the performance and scalability of a system or application under its expected workload. It determines how the system performs in terms of sensitivity, reactivity, and stability under a particular workload.

Objectives

To eliminate performance bottlenecks.

To uncover what needs to be improved before the product is released to market.

To make the software fast, stable, and reliable.

To evaluate the performance and scalability of a system or application under various loads and conditions: identify bottlenecks, measure system performance, and confirm that the system can handle the expected number of users or transactions and the anticipated load of the production environment.

Types

Load testing:

- Load testing simulates a realistic load on the system to see how it performs under its expected workload. It helps identify bottlenecks and determine the maximum number of users or transactions the system can handle.

Stress testing:

- Stress testing is a type of load testing that tests the system’s ability to handle loads above normal usage levels. It helps identify the breaking point of the system and any potential issues that may occur under heavy load conditions.

Spike testing:

- Spike testing is a type of load testing that tests the system’s ability to handle sudden spikes in traffic. It helps identify any issues that occur when the system is suddenly hit with many requests.

Soak testing:

- Soak testing is a type of load testing that tests the system’s ability to handle a sustained load over a prolonged period. It helps identify any issues that may occur after prolonged system usage.

Endurance testing:

- This type of testing is similar to soak testing, but it focuses on the system's long-term behaviour under a constant load.

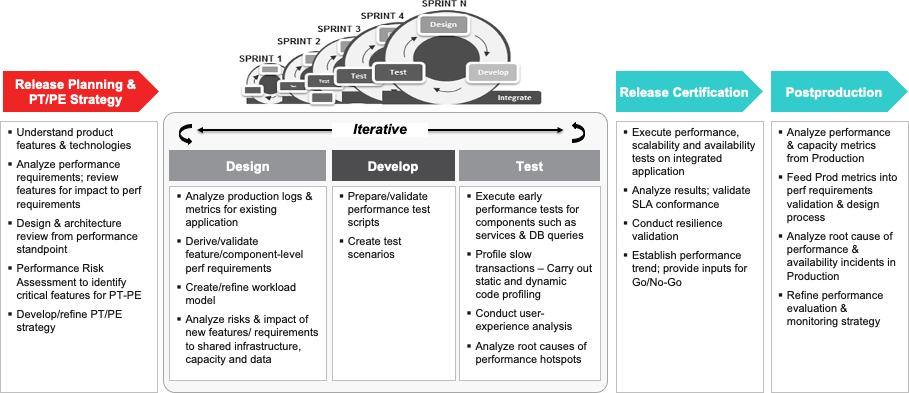

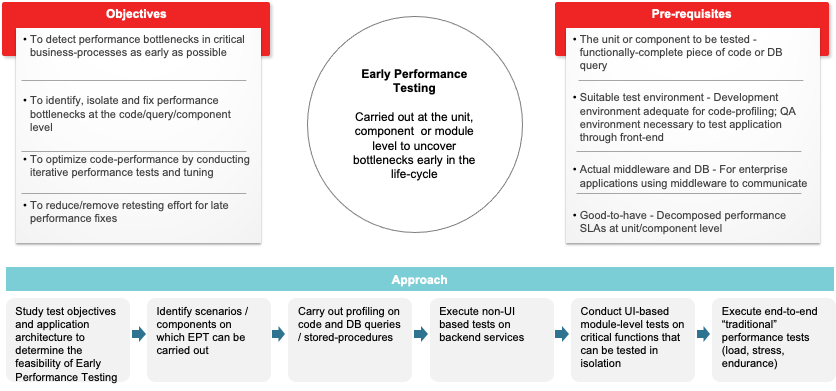
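To make the differences between these test types concrete, the sketch below models each one as a load profile: the target number of virtual users at elapsed time t. It is a minimal illustration under stated assumptions; the function names, ramp-up lengths, step sizes, and spike multiplier are hypothetical, and in practice the profile would be configured in the load generation tool rather than written by hand.

```python
# Hypothetical load profiles: each function returns the target number of
# virtual users at elapsed time t (seconds). All parameter values are
# illustrative assumptions, not figures from this strategy.


def load_profile(t: float, users: int, ramp_up: float) -> int:
    """Load test: ramp linearly up to the expected user count, then hold it."""
    if t >= ramp_up:
        return users
    return round(users * t / ramp_up)


def stress_profile(t: float, users: int, step: int, step_every: float) -> int:
    """Stress test: start at the expected load and add `step` users every
    `step_every` seconds until the system breaks (the run is stopped externally)."""
    return users + step * int(t // step_every)


def spike_profile(t: float, users: int, spike_start: float,
                  spike_len: float, multiplier: int) -> int:
    """Spike test: steady load with a sudden, short burst of extra users."""
    if spike_start <= t < spike_start + spike_len:
        return users * multiplier
    return users


def soak_profile(t: float, users: int, ramp_up: float) -> int:
    """Soak/endurance test: the same shape as a load test, held for hours
    instead of minutes; only the run duration differs."""
    return load_profile(t, users, ramp_up)


if __name__ == "__main__":
    # 100 users with a 5x spike starting at t=600 s and lasting 60 s.
    print([spike_profile(t, 100, 600, 60, 5) for t in (0, 599, 600, 659, 660)])
    # prints [100, 100, 500, 500, 100]
```

The soak profile deliberately reuses the load profile: what distinguishes the two is how long the load is held, not the shape of the curve.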

Approach to Early Performance Testing in Agile

The goal of Performance Testing in Agile is to “test performance early and often” in the development effort and to “test functionality and performance in the same sprint.” In an Agile project, “done” should include completing performance testing within a sprint.

Agile Performance Testing can be broken down into:

- Testing at the code level

- Response time testing for newly developed application features during the sprint

- Performance regression testing for the system integrated with newly developed features

As soon as the coding starts in an Agile project, performance testing at the coding level can be started in parallel.

Once functional testing of a newly developed feature is complete, the feature should be response-time tested to measure how long it takes to perform its function.
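At the code level, a response time test can be as simple as timing repeated calls to the new feature and comparing a high percentile with a budget. The sketch below assumes a Python code base; `lookup_price` is a hypothetical stand-in for the newly developed feature, and the 95th-percentile target and 500 ms budget are illustrative values, not requirements from this strategy.

```python
import math
import statistics
import time
from typing import Callable


def measure_response_times(func: Callable[[], object], runs: int = 50) -> list[float]:
    """Call `func` `runs` times and return each call's duration in milliseconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        durations.append((time.perf_counter() - start) * 1000.0)
    return durations


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct in (0, 100]) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def check_response_time(func: Callable[[], object], budget_ms: float,
                        runs: int = 50) -> bool:
    """Return True if the 95th-percentile response time is within budget."""
    durations = measure_response_times(func, runs)
    p95 = percentile(durations, 95)
    print(f"avg={statistics.mean(durations):.3f} ms, "
          f"p95={p95:.3f} ms, budget={budget_ms} ms")
    return p95 <= budget_ms


def lookup_price(sku: str = "0042") -> int:
    """Hypothetical new feature under test: a price lookup in a small catalogue."""
    catalogue = {f"{i:04d}": i * 10 for i in range(10_000)}
    return catalogue[sku]


if __name__ == "__main__":
    # Hypothetical budget: 95% of calls must complete within 500 ms.
    print("PASS" if check_response_time(lookup_price, budget_ms=500.0) else "FAIL")
```

A percentile is used instead of the average because a few slow calls can hide behind a good mean; in a real suite the first few calls are often discarded as warm-up.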

Once newly developed functions get integrated with the overall system, performance testing is conducted to ensure that the newly developed features do not introduce any performance bottlenecks in the overall system behavior.
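Performance regression testing typically compares each new run against a baseline from a previously accepted build. The sketch below flags any metric that moved in the wrong direction by more than a tolerance; the metric names, the 10% tolerance, and storing the baseline as a plain dictionary are assumptions for illustration.

```python
# Metrics for which a larger value is an improvement; every other metric
# (response times, error counts) is treated as "lower is better".
HIGHER_IS_BETTER = {"throughput_hps"}


def find_regressions(baseline: dict[str, float], current: dict[str, float],
                     tolerance: float = 0.10) -> dict[str, tuple[float, float]]:
    """Return {metric: (baseline, current)} for metrics that got worse by more
    than `tolerance` (a fraction of the baseline value)."""
    regressions = {}
    for name, base in baseline.items():
        if name not in current:
            continue  # metric not collected in this run; nothing to compare
        cur = current[name]
        if name in HIGHER_IS_BETTER:
            worse = cur < base * (1 - tolerance)
        else:
            worse = cur > base * (1 + tolerance)
        if worse:
            regressions[name] = (base, cur)
    return regressions


if __name__ == "__main__":
    baseline = {"avg_response_ms": 200.0, "max_response_ms": 900.0,
                "throughput_hps": 50.0}
    current = {"avg_response_ms": 215.0, "max_response_ms": 1200.0,
               "throughput_hps": 40.0}
    print(find_regressions(baseline, current))
    # prints {'max_response_ms': (900.0, 1200.0), 'throughput_hps': (50.0, 40.0)}
```

Because the tolerance is relative, a baseline of zero errors means any error in the new run is reported as a regression.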

Performance testing during the regression phase helps identify any configuration, hardware sizing, and infrastructure issues.

Performance Testing Approach

Performance Testing at the ART level

The Performance Team executes the performance tests in the PRE-PROD environment and raises issues, to be fixed in Train 2 or a later train as agreed in the PI Planning workshop (priority is defined by the Business).

Tools

- BlazeMeter

Metrics

- Number of virtual users

- Number of requests

- Hits per second (throughput)

- Average response time

- Maximum response time

- Number of errors
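The metrics above can be derived from the raw per-request results recorded by a load tool. The sketch below assumes a hypothetical `Sample` record (start time, response time, success flag, virtual user id); real result exports from the load testing tool use their own field names and formats. Throughput is computed over the window from the first request start to the last request completion.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One request result (hypothetical record layout)."""
    timestamp_s: float  # request start, seconds since the test started
    elapsed_ms: float   # response time in milliseconds
    ok: bool            # False if the request failed
    vuser: int          # id of the virtual user that sent the request


def summarize(samples: list[Sample]) -> dict[str, float]:
    """Compute the test-level metrics from a non-empty list of samples."""
    if not samples:
        raise ValueError("no samples to summarize")
    start = min(s.timestamp_s for s in samples)
    end = max(s.timestamp_s + s.elapsed_ms / 1000.0 for s in samples)
    duration = end - start
    times = [s.elapsed_ms for s in samples]
    return {
        "virtual_users": len({s.vuser for s in samples}),
        "requests": len(samples),
        "hits_per_second": len(samples) / duration if duration > 0 else float("nan"),
        "avg_response_ms": sum(times) / len(times),
        "max_response_ms": max(times),
        "errors": sum(1 for s in samples if not s.ok),
    }


if __name__ == "__main__":
    samples = [
        Sample(0.0, 200.0, True, 1),
        Sample(0.5, 300.0, True, 2),
        Sample(1.0, 500.0, False, 1),
        Sample(1.5, 500.0, True, 2),
    ]
    print(summarize(samples))
```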

System Metrics

- CPU utilization (%)

- Memory utilization (%)

- Network traffic

Business Metrics

- Number of transactions (if transactions do not map one-to-one to single requests)

- Transactions per unit of time
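When one business transaction (for example, a checkout) spans several requests, transaction counts have to be derived by grouping requests. The sketch below assumes each request is tagged with a hypothetical transaction id and treats a transaction as lasting from its first request start to its last request end.

```python
def transaction_metrics(requests: list[tuple[str, float, float]]) -> dict[str, float]:
    """Aggregate (transaction_id, start_s, end_s) request records into
    business-level metrics. Assumes a non-empty input."""
    spans: dict[str, list[float]] = {}
    for tx_id, start, end in requests:
        if tx_id in spans:
            # Widen the transaction's span to cover this request.
            spans[tx_id][0] = min(spans[tx_id][0], start)
            spans[tx_id][1] = max(spans[tx_id][1], end)
        else:
            spans[tx_id] = [start, end]

    test_start = min(s for s, _ in spans.values())
    test_end = max(e for _, e in spans.values())
    minutes = (test_end - test_start) / 60.0
    return {
        "transactions": len(spans),
        "transactions_per_minute": len(spans) / minutes if minutes > 0 else float("nan"),
        "avg_transaction_s": sum(e - s for s, e in spans.values()) / len(spans),
    }


if __name__ == "__main__":
    requests = [
        ("tx1", 0.0, 1.0), ("tx1", 1.0, 3.0),  # two requests, one transaction
        ("tx2", 10.0, 12.0),
        ("tx3", 60.0, 64.0), ("tx3", 64.0, 120.0),
    ]
    # 5 requests but 3 transactions over 2 minutes: 1.5 transactions per minute.
    print(transaction_metrics(requests))
```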

Entry, Exit & Suspension Criteria

Entry Criteria

The performance test plan is complete and approved by project management.

The correct version is installed in the performance testing environment, i.e. the version that was previously functionally tested and fixed if needed.

Test data is complete and loaded into the performance testing environment early enough to allow the test scripts to be completed.

Test accounts have been created in the performance testing environment early enough to allow the test scripts to be completed.

Test scripts are complete.

All assigned resources are available to monitor the test.

All parameter sets used in the scripts are generated from database values.

Exit Criteria

All test scripts completed successfully.

No critical problems encountered.

All non-critical problems are logged.

All test logs are captured.

All post-test notifications sent.

All test results comply with the requirements.
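The last criterion can be checked mechanically once the requirements are expressed as numeric limits. The sketch below is one way to do that; the metric names and limits are hypothetical, and the non-numeric criteria (logs captured, notifications sent) still need a manual check.

```python
def exit_criteria_failures(results: dict[str, float], limits: dict[str, float],
                           critical_problems: int) -> list[str]:
    """Return the reasons a run fails its exit criteria (empty list = pass).
    `limits` holds hypothetical upper bounds taken from the requirements."""
    failures = []
    if critical_problems > 0:
        failures.append(f"{critical_problems} critical problem(s) encountered")
    for metric, limit in limits.items():
        value = results.get(metric)
        if value is None:
            failures.append(f"{metric}: not measured")
        elif value > limit:
            failures.append(f"{metric}: {value} exceeds limit {limit}")
    return failures


if __name__ == "__main__":
    limits = {"avg_response_ms": 500.0, "error_rate": 0.01}
    results = {"avg_response_ms": 375.0, "error_rate": 0.25}
    for reason in exit_criteria_failures(results, limits, critical_problems=0):
        print("FAIL:", reason)
```

Treating an unmeasured metric as a failure keeps a misconfigured run from passing silently.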

Suspension Criteria

Critical problems are encountered and logged.

Hardware or environment errors prevent the completion of the test.

Test data is missing or incorrect.

Risks and Contingencies

The performance testing environment's configuration differs significantly from the production environment.

Performance testing results can differ substantially even with minor differences in think time, arrival rate, and test duration.
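The sensitivity to think time follows from a standard queueing result, the interactive response time law (Little's law applied to a closed system). With N concurrent virtual users, average response time R, and average think time Z, the throughput X the users generate is given below, with an illustrative worked example that assumes each user iteration issues one request and that R stays at 1 s:

```latex
\begin{aligned}
X &= \frac{N}{R + Z} \\
Z = 4\,\mathrm{s}:\quad X &= \frac{100}{1 + 4} = 20\ \text{requests/s} \\
Z = 3\,\mathrm{s}:\quad X &= \frac{100}{1 + 3} = 25\ \text{requests/s}
\end{aligned}
```

Shortening think time by one second raises the offered load by 25% with the same 100 virtual users. In practice R also grows as load increases, so the real effect on response times can be larger. In an open workload model the arrival rate sets the offered load directly, so runs are only comparable when think time, arrival rate, and duration match.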

During test execution, major performance or functional problems that require code changes and a new build may be discovered; in that case, it may be necessary to repeat the load test from the beginning.

Load tests should be performed against a stable build that has been functionally tested after code completion. Otherwise, the test scripts may need to be reworked for every new build and the load test repeated from the beginning, which will delay the product release.

Performance testing tools cannot reproduce real-life scenarios exactly, so results should be treated as having limited reliability.

Network/systems latency issues.

Unavailability of the performance testing environment.