Definition and Purpose

Static testing is a software testing technique comprising static analysis and reviews.

Static analysis refers to the examination of software source code without executing it. Developers carry out this analysis using tools such as SonarQube to check code syntax, adherence to coding standards, code optimization, and similar concerns. Developers can perform this analysis at any point during the development or configuration phase of the project lifecycle.
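Here is a minimal sketch of what "without executing the code" means in practice. Python and the two rules it checks are assumptions chosen for illustration; they are not SonarQube's behaviour or rule set. The sketch parses source text with the standard-library `ast` module and walks the syntax tree. It flags function names that are not snake_case and bare `except:` clauses. The sample code is only parsed, never run.

```python
import ast
import re

# Hypothetical coding standard: function names must be snake_case.
SNAKE_CASE = re.compile(r"^[a-z_][a-z0-9_]*$")


def find_issues(source: str) -> list[str]:
    """Parse `source` into a syntax tree (never executing it) and list rule violations."""
    tree = ast.parse(source)
    issues: list[str] = []
    for node in ast.walk(tree):
        # Rule 1: function names must follow the snake_case convention.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if not SNAKE_CASE.match(node.name):
                issues.append(f"line {node.lineno}: function '{node.name}' is not snake_case")
        # Rule 2: a bare `except:` silently hides unexpected errors.
        elif isinstance(node, ast.ExceptHandler) and node.type is None:
            issues.append(f"line {node.lineno}: bare 'except:' clause")
    return issues


SAMPLE = """
def CalculateTotal(items):
    try:
        return sum(items)
    except:
        return 0
"""

if __name__ == "__main__":
    for issue in find_issues(SAMPLE):
        print(issue)
```

Real static analysis tools apply hundreds of such rules and add data-flow analysis. The principle is the same: inspect the structure of the code rather than its runtime behaviour.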


This process covers the review of project artifacts from a QE perspective, the review of testing artifacts, and the role of Quality Engineers in these reviews.

Target Audience

QE Chapter Leader, Release Quality Manager, QE Specialist (Functional SME), Test Automation Engineer (TAE), Performance Test Engineer (PTE), Security Test Engineer (STE) and Accessibility Test Engineer (ATE).

Objectives

Reviews are performed by a team of testers, developers, and subject matter experts to analyze, find, and eliminate errors or ambiguities in documents such as requirements, design documents, and test cases. This process covers both the reviews of project artifacts (such as requirements/user stories, design, and test cases) conducted by the QE team and the peer or internal reviews of testing deliverables conducted within the QE team.

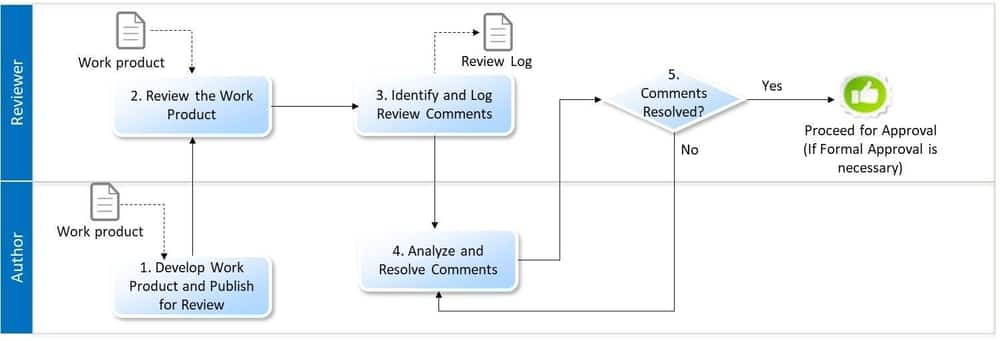

Process Flow

An illustrative view of the process flow typically followed for reviews is shown below.

List of Work Products and Recommended Review Types

To ensure a thorough review of work products, teams can use the following recommended review types. Depending on the project requirements and the nature of the work product, teams can choose to use one or more of these review types.

| Work Product | Review Criteria/Trigger | Location of Review Log | Offline Review | Group Review | Formal Walk-Through |
|---|---|---|---|---|---|
| Requirements/User Stories | | Within the Requirements document/User Story/Sprint backlog | ✔ | | Applicable only for Waterfall projects where the BA/Product Owner conducts a walk-through of the requirements for the project team |
| Design | | Within the document | ✔ | | Applicable only for Waterfall projects where the Architect/Developer conducts a walk-through of the design for the project team |
| Test Estimates (Waterfall Model) | | Within the document | ✔ | Applicable only when multiple applications, projects, etc. are impacted | |
| Test Strategy | | Within the document | ✔ | Applicable only when multiple applications, projects, etc. are impacted | Applicable only for Waterfall projects where the QE Architect conducts a walk-through of the test strategy for the project team |
| Test Scenarios and Test Cases | | Qtest/JIRA | ✔ | | Applicable only in projects where the QE Architect conducts a walk-through of the test cases for the BA/Product Owner |
| Automation Scripts | | UTAF | ✔ | | |
| Non-Functional Test (NFT) Scripts | | Within the Tool | ✔ | | |
| Test Execution Summary Report | | Within the document | ✔ | | |

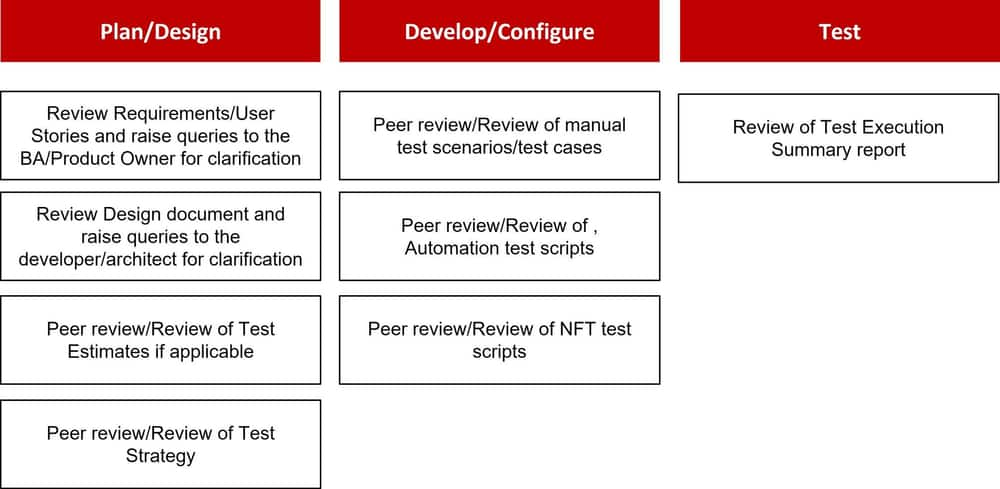

QE Reviews in Project Lifecycle

The diagram below captures the peer review/review activities performed by QE team in the project lifecycle.

Plan/Design Phase

The QE team reviews the requirements/user stories and design documents to understand the testing scope. It also raises any queries with the business, product owner, business analyst, and developers for clarification.

These reviews focus on determining whether the requirements are testable, complete, consistent, and unambiguous, and whether they provide enough information to design test scenarios and test cases.

The QE team conducts peer reviews of the test strategy in this phase. It ensures the review comments are addressed in the document before the document is published to project stakeholders for review and approval.

Develop/Configure Phase

The QE team performs a peer review of the test scenarios/cases to ensure that the test cases adequately cover functionality, application transitions, test coverage, positive/negative testing, and similar aspects. This review is carried out before the team shares the test scenarios/cases with the Business Analyst for review and approval.

A similar process is followed for peer reviews of automation and NFT scripts.

Test Phase

The QE Chapter Leader/Release Quality Manager reviews the test execution summary report and provides the testing sign-off.

Key Considerations for Reviewing the Work Products

Reviewers should consider the following key questions when reviewing or peer-reviewing the work products in the project lifecycle.

Requirements/User Stories

- Testability: Is the user story testable? Can it be effectively tested to ensure the desired functionality is implemented correctly?

- Acceptance Criteria: Are the acceptance criteria well-defined and specific? Do they provide clear guidance on how to validate the user story's functionality?

- Non-functional Requirements: Does the user story include any non-functional requirements, such as performance, security, or accessibility? Are these requirements clearly stated and testable?

- Test Data Requirements: Are there any specific test data requirements for validating the user story? Have these requirements been documented to ensure accurate and comprehensive testing?

- Dependencies: Are there any dependencies between the user story and other components or systems? Have these dependencies been identified and considered when planning the testing approach?

- Regression Testing Impact: Has the potential impact of the user story on existing functionality been assessed? Are there any regression testing considerations or test coverage adjustments needed?

- Edge Cases and Error Handling: Has the user story been reviewed for edge cases and error conditions? Are there specific scenarios or conditions that need to be tested to ensure robustness and proper error handling?

- Integration and Compatibility: Have the integration points and compatibility requirements been considered if the user story involves integration with other systems or components? Are there any specific integration tests or compatibility checks needed?

- Performance and Load Considerations: Does the user story have performance or load-related aspects? Have performance test scenarios or load testing requirements been identified and documented?

- Usability and User Experience: Have specific test scenarios or usability tests been defined if the user story impacts usability or user experience? Are there any accessibility requirements that need to be considered?

Design

- Clarity and Completeness: Is the Technical Design Document clear and comprehensive, providing a detailed description of the technical design? Are all components, modules, and interfaces clearly defined and explained to identify the testing scope?

- Consistency and Compliance: Does the Technical Design Document align with the project requirements and specifications?

- Testability and Maintainability: Does the Technical Design Document support testability by allowing easy identification and isolation of components for testing? Are the interfaces and dependencies well-defined, facilitating mocking or stubbing of external dependencies during testing?

- Performance and Scalability: Has the Technical Design Document considered performance and scalability requirements? Are there any potential bottlenecks or performance concerns identified in the design that need to be addressed during performance testing?

- Error Handling and Exception Management: Does the Technical Design Document include robust error-handling mechanisms? Are error scenarios, exceptions, and edge cases considered for the QE team to develop negative test scenarios?

- Security and Data Protection: Has the Technical Design Document addressed security and data protection requirements to identify the security testing scope?

- Integration and Interoperability: Does the Technical Design Document address integration with external systems or third-party components? Are the interfaces and protocols well-defined for seamless interoperability?

- Usability and User Experience: Has the Technical Design Document considered usability and user experience aspects?

- Alignment with Test Strategy: Does the Technical Design Document align with the overall test strategy and testing approach? Are there any specific testing considerations or challenges identified in the design? Does the design facilitate automation testing, if applicable?

Test Estimates (Waterfall Model)

- Sizing: Are you utilizing a relative sizing approach, such as test case points, to estimate the testing effort for each requirement? Have you considered factors like complexity, risks, and the effort required for test case design, execution, and defect management?

- Test Effort Breakdown: Have you broken down the testing effort into specific tasks or activities, such as test planning, test case design, test execution, defect management, and regression testing? Did you estimate the effort for each task based on its complexity and expected duration?

- Test Case Design: Have you considered the time required to design test cases based on the requirements? Did you estimate the effort based on the number of test cases, complexity, and coverage required?

- Test Execution: Have you estimated the effort required for executing the test cases? Did you consider factors such as the number of test cases, execution time per test case, test data setup, and any additional setup or teardown activities?

- Defect Management: Have you estimated the effort needed for defect identification, logging, tracking, and retesting? Did you consider the expected defect rate, complexity of defects, and the time required for defect resolution?

- Test Data Management: Have you considered the effort required to manage test data, including data generation, extraction, masking, or data setup for specific test scenarios? Did you estimate the effort based on the complexity and volume of test data required?

- Test Environment Setup: Have you estimated the time needed to set up and configure the test environment? Did you consider factors such as server setup, software installations, network configurations, and any external dependencies?

- Test Automation: Have you evaluated the feasibility and effort required for test automation? Did you estimate the time needed for framework setup, script development, maintenance, and execution?
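The breakdown questions above amount to summing per-activity estimates. Here is a minimal sketch of that arithmetic. It assumes effort is expressed in hours, and every input figure below is a hypothetical placeholder rather than an organizational norm. It also assumes that each execution cycle runs every test case once and repeats the test data setup/teardown.

```python
from dataclasses import dataclass


@dataclass
class EstimateInputs:
    """Inputs for a task-level test estimate (all values are hours or counts)."""

    test_cases: int               # test cases to design and execute
    design_hours_per_case: float  # average design effort per test case
    exec_hours_per_case: float    # average execution effort per test case
    execution_cycles: int         # e.g. one SIT cycle plus one regression cycle
    setup_hours_per_cycle: float  # test data setup and teardown per cycle
    expected_defects: int         # expected defect count, e.g. from past releases
    hours_per_defect: float       # logging, tracking, and retesting one defect
    environment_hours: float      # one-off environment setup and configuration
    planning_hours: float         # test planning activities


def estimate_effort(e: EstimateInputs) -> dict[str, float]:
    """Break the total testing effort (hours) down by activity."""
    breakdown = {
        "planning": e.planning_hours,
        "environment setup": e.environment_hours,
        "test case design": e.test_cases * e.design_hours_per_case,
        # Assumption: each cycle executes every test case once and repeats setup/teardown.
        "test execution": e.execution_cycles
        * (e.test_cases * e.exec_hours_per_case + e.setup_hours_per_cycle),
        "defect management": e.expected_defects * e.hours_per_defect,
    }
    # The right-hand side is evaluated before "total" is added, so only activities are summed.
    breakdown["total"] = sum(breakdown.values())
    return breakdown


if __name__ == "__main__":
    # Hypothetical sample figures for illustration only.
    inputs = EstimateInputs(
        test_cases=120,
        design_hours_per_case=0.5,
        exec_hours_per_case=0.25,
        execution_cycles=2,
        setup_hours_per_cycle=4.0,
        expected_defects=30,
        hours_per_defect=2.0,
        environment_hours=16.0,
        planning_hours=24.0,
    )
    for activity, hours in estimate_effort(inputs).items():
        print(f"{activity}: {hours:g} h")
```

With these sample inputs, the breakdown is as follows:

- Planning: 24 h
- Environment setup: 16 h
- Test case design: 60 h
- Test execution: 68 h
- Defect management: 60 h

That is 228 h in total. Automation effort (framework setup, script development, maintenance) could be added as another line item in the same way.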

Test Strategy

- Scope: Does the test strategy address the scope and objectives of the testing? Does the scope detail the requirements to be tested, types of test execution, interfaces to external systems, and functionality coverage?

- Test Approach: Does the test strategy list and describe in detail all the types of testing in scope, such as smoke, functional, SIT, regression, data, and mobility testing?

- Automation: Does the strategy describe the automation approach to be adopted for the various types of testing, such as functional, smoke, and regression testing?

- Non-functional testing: Are the non-functional types of testing, such as performance, security etc., covered in detail in the strategy?

- Test Coverage: Does the test strategy address the test coverage details for each system/subsystem?

- Resources and Tool Requirements: Have the resource requirements for the test environment, personnel, and tools been addressed in the test strategy?

- Risks and Assumptions: Have assumptions, risks, or contingencies impacting the test been validated and documented?

- Test Data: Does the test strategy address the test data sources, creation methods, tools to generate test data and data dependencies for testing?

- Metrics: Are the metrics and measurement processes covered in the strategy?

- Quality Gates: Are the Entry and Exit criteria addressed for all types of test execution?

- Defect Management: Have the defect tracking and reporting methods been addressed in the test strategy?

- Suspension and Resumption: Are the suspension and resumption criteria documented as part of the test strategy?

Test Scenarios and Test Cases

- Functionality (Coverage): Are all the testable requirements in the requirements/user stories covered by the test cases?

- Flow of the script: Does the test case flow correctly to achieve the desired result in the best possible way?

- Multiple path testing: Have all the paths that the user may choose to achieve a particular result been covered in the test case?

- Naming Convention: Is there a proper naming convention to identify the functionality?

- Prerequisites: Have all the prerequisites related to functionality testing been mentioned?

- Test description: Is the test objective mentioned clearly in the test description?

- Quality of Test Cases: Does every incomplete test case have an open clarification tagged to it? (No test case should remain incomplete.)

- Test Coverage: Are all the test cases linked to requirements/User Stories?

- Proper Breakup: Are the test cases logically broken up, with the steps written accordingly?

- Browser Test: Are test cases present to test the application in different browsers? (in the case of web applications)

- Application details: Are transitions between applications properly tested? (If more than one application is involved in a single scenario)

- Boundary value testing: Are there test cases to check boundary values? (e.g., values at the boundaries of, within, and outside the range of the specified in-scope data)

- Interface testing: Are there test cases to cover data interfaces with upstream and downstream applications?

- Database/API testing: Are there test cases to cover database and API testing?

- Negative Test: Are suitable negative scenarios covered? Are there test cases for testing the error-handling capacity of the application/product?

- Mobile Testing: Are there test cases to cover testing of the application across combinations of operating systems and mobile devices? Are there test cases to cover mobile-specific validation, such as network availability, call handling, and memory availability?
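Two of the checks above, boundary value coverage and linking test cases to requirements, are mechanical enough to sketch. Here is a minimal Python illustration under stated assumptions:

- `boundary_values` applies classic boundary value analysis to an inclusive integer range.
- `traceability_gaps` assumes the test-to-requirement links have been exported from the test management tool as a simple mapping.

The function names and all IDs are hypothetical.

```python
def boundary_values(minimum: int, maximum: int) -> dict[str, list[int]]:
    """Boundary value analysis for an inclusive integer range [minimum, maximum]."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    # Values on and just inside each boundary (deduplicated for narrow ranges).
    candidates = {minimum, minimum + 1, maximum - 1, maximum}
    valid = sorted(v for v in candidates if minimum <= v <= maximum)
    # Values just outside each boundary, which the application should reject.
    invalid = [minimum - 1, maximum + 1]
    return {"valid": valid, "invalid": invalid}


def traceability_gaps(
    requirements: set[str], links: dict[str, set[str]]
) -> tuple[list[str], list[str]]:
    """Return (requirements with no test case, test cases with no requirement).

    `links` maps a test case ID to the requirement/user story IDs it covers.
    """
    covered = set().union(*links.values())
    uncovered = sorted(requirements - covered)
    unlinked = sorted(tc for tc, reqs in links.items() if not reqs)
    return uncovered, unlinked


if __name__ == "__main__":
    # e.g. a hypothetical "age" field that accepts 18 to 65 inclusive
    print(boundary_values(18, 65))
    links = {"TC-01": {"US-101"}, "TC-02": {"US-101", "US-102"}, "TC-03": set()}
    print(traceability_gaps({"US-101", "US-102", "US-103"}, links))
```

For the 18 to 65 range, the reviewer would expect test cases for 17, 18, 19, 64, 65, and 66. In the traceability example, US-103 has no test case and TC-03 is not linked to any requirement, so both would be raised as review comments.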

Automation Scripts

- Test Case Coverage: Are the test automation scripts covering all the relevant test cases as defined in the test plan or test strategy? Ensure adequate coverage for functional, regression, and edge cases.

- Test Data Management: Are the test data requirements clearly defined and handled appropriately in the automation scripts? Verify that the scripts use the correct test data sets and handle data dependencies effectively.

- Test Script Readability: Are the automation scripts easy to read, understand, and maintain? Check for clear and concise coding practices, appropriate variable and function naming conventions, and code comments for better comprehension.

- Test Script Structure: Do the automation scripts follow a consistent and logical structure? Review the script organization, modularization, and adherence to coding standards or style guidelines.

- Test Script Reusability: Can the automation scripts be easily reused across different test scenarios or projects? Assess the potential for script reuse by identifying common functions, libraries, or modules that can be shared.

- Test Script Dependencies: Have the automation script dependencies, such as external libraries or frameworks, been documented and handled appropriately? Ensure that the necessary dependencies are resolved and configured correctly.

- Error Handling: Are there appropriate error-handling mechanisms within the automation scripts? Check for proper exception handling, error logging, and error reporting to improve script robustness and maintainability.

- Test Execution Flow: Does the execution flow of the automation scripts make logical sense? Review the sequence of steps and ensure that the flow aligns with the expected test scenario or use case.

- Assertions and Verifications: Are the assertions and verifications within the automation scripts accurate and relevant? Verify that the expected results are correctly compared against the actual results to determine the test pass/fail status.

- Test Reporting: Can comprehensive and meaningful test reports be generated? Ensure the automation scripts capture relevant test results, errors, and other relevant information for effective reporting and analysis.

- Maintenance and Updates: Assess the ease of maintenance and updates for the automation scripts. Consider factors like script version control, change management, and adaptability to evolving application changes.
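Here is a small sketch of what several of these checks look like in a script. It uses Python's standard `unittest` module and illustrates the following practices:

- Readable test names.
- Test data kept apart from test logic.
- Explicit assertions.
- A negative path that asserts the error instead of swallowing it.

The `apply_discount` function and its rules are hypothetical stand-ins for the application under test. A real script would follow the conventions of the project's own automation framework (such as UTAF).

```python
import unittest


# Hypothetical function under test; a real suite would import it from the
# application or wrap it in a page/service object provided by the framework.
def apply_discount(price: float, percent: float) -> float:
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


# Test data kept separate from test logic (it could equally be loaded from a file).
DISCOUNT_CASES = [
    # (price, percent, expected)
    (100.0, 0, 100.0),
    (100.0, 10, 90.0),
    (59.99, 100, 0.0),
]


class ApplyDiscountTests(unittest.TestCase):
    """Each test name states the behaviour it verifies."""

    def test_valid_discounts_return_expected_price(self) -> None:
        for price, percent, expected in DISCOUNT_CASES:
            # subTest reports each data row separately instead of stopping at the first failure.
            with self.subTest(price=price, percent=percent):
                self.assertEqual(apply_discount(price, percent), expected)

    def test_out_of_range_discount_is_rejected(self) -> None:
        # Negative path: the expected error is asserted explicitly, not swallowed.
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)
```

Saved as, for example, `test_discount.py` (a hypothetical file name), the suite can be run with `python -m unittest test_discount`.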

Non-Functional Test Scripts

- Test Objective: Does the non-functional test script clearly define the objective of the test? Is it aligned with the non-functional aspect the test is intended to evaluate, such as performance, security, scalability, or usability?

- Test Environment: Is the test environment properly set up to simulate real-world conditions for non-functional testing? Verify that the necessary infrastructure, configurations, and dependencies are in place.

- Test Data: Are the appropriate test data sets available for executing the non-functional test script? Ensure that the test data accurately represents the scenarios being tested and covers different use cases.

- Test Scenarios: Do the non-functional test scripts include specific scenarios or use cases to evaluate the desired non-functional aspect? Review the scenarios to ensure they cover relevant performance levels, security vulnerabilities, scalability limits, or usability conditions.

- Test Metrics: Are the metrics or criteria for measuring the non-functional aspect clearly defined within the test script? Verify that the script captures the required measurements, such as response times, throughput, error rates, or compliance with security standards.

- Test Tools: Have the appropriate tools or frameworks been selected and utilized within the non-functional test script? Check if the scripts leverage the right tools for performance testing, security scanning, or usability evaluations.

- Test Script Execution: Review the script's execution flow to ensure it aligns with the defined non-functional test scenarios. Verify that the steps and actions within the script accurately simulate the expected behavior or conditions.

- Test Parameters: Are the specific parameters to be measured or observed during the non-functional test identified within the script? Validate that the necessary measurements, logs, or observations are captured during test execution.

- Baseline Measurements: Does the non-functional test script establish baseline measurements for comparison? Ensure that there are reference measurements to evaluate the system's behavior and identify any deviations or regressions.

- Test Analysis and Reporting: Is there a process to analyze the non-functional test results and generate comprehensive reports? Verify that the script provides insights into the system's non-functional behavior and helps identify areas for improvement or optimization.

- Test Iteration: Consider whether multiple iterations or variations of the non-functional test script are required to evaluate the non-functional aspect comprehensively. Assess whether the script accounts for different load levels, security attack vectors, or usability scenarios.

- Test Maintenance: Review the non-functional test script's maintainability and update process. Ensure that procedures are in place to keep the script aligned with changes in the application or environment and to address any evolving non-functional requirements.
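The baseline comparison can be expressed as a small calculation. The sketch below makes several assumptions, all of which should be replaced by the project's agreed NFR thresholds:

- Response times are collected in milliseconds.
- The nearest-rank 95th percentile is the comparison metric.
- A result more than a hypothetical 10% above the baseline counts as a regression.

```python
import math
from dataclasses import dataclass


@dataclass
class BaselineResult:
    p95_ms: float     # 95th percentile of the current run
    limit_ms: float   # baseline plus the allowed tolerance
    regression: bool  # True when the current p95 exceeds the limit


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def compare_to_baseline(
    current_ms: list[float], baseline_p95_ms: float, tolerance_pct: float = 10.0
) -> BaselineResult:
    """Compare the current run's p95 response time with a stored baseline p95."""
    p95 = percentile(current_ms, 95)
    # Allowed ceiling: the baseline plus the agreed tolerance.
    limit = baseline_p95_ms * (100 + tolerance_pct) / 100
    return BaselineResult(p95_ms=p95, limit_ms=limit, regression=p95 > limit)


if __name__ == "__main__":
    # Hypothetical response times from a test run: 100, 110, ..., 290 ms.
    samples = [float(ms) for ms in range(100, 300, 10)]
    print(compare_to_baseline(samples, baseline_p95_ms=250.0))
```

Here the current p95 is 280 ms against an allowed ceiling of 275 ms, so the run would be flagged as a regression. Keeping the percentile method fixed between runs matters more than which method is chosen, because otherwise baseline and current figures are not comparable.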

Test Execution Summary Report

- Test Coverage: Does the report provide information about the overall test coverage for the application (passed, failed, and not run)?

- Execution Details: Are test case execution details (passed, failed, and total defects) provided for each requirement/user story?

- Defect Details: Are the defect details (resolved vs. unresolved) covered in the report?

- Automation: If automation is in scope, are the test execution results of the automated test cases captured in the report?

- NFT: If NFT is in scope, are the test execution results of the NFT test scripts captured in the report?

- Exit Criteria Check: Verify that the report provides the information needed to show that the exit criteria for testing are satisfied.

- Test Objectives: Ensure the report states whether the test objectives have met the acceptance criteria.

- Known Issues: Verify that details of outstanding defects or known issues have been provided, along with acceptable workarounds. Ensure that any functionality that should have been tested but was not is explicitly mentioned in the report.
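The coverage figures and the exit criteria check can be derived directly from execution results. Here is a minimal sketch. It assumes results are exported as a mapping from test case ID to one of three statuses. It uses the following hypothetical exit criteria:

- No test cases left unrun.
- A pass rate of at least 95% of executed tests.
- No open critical defects.

The project's own exit criteria from the test strategy take precedence.

```python
from collections import Counter

STATUSES = ("passed", "failed", "no run")


def summarize(results: dict[str, str]) -> dict[str, int]:
    """Count test cases per status; `results` maps test case ID -> status."""
    unknown = set(results.values()) - set(STATUSES)
    if unknown:
        raise ValueError(f"unknown status(es): {sorted(unknown)}")
    counts = Counter(results.values())
    summary = {status: counts[status] for status in STATUSES}
    summary["total"] = len(results)
    return summary


def unmet_exit_criteria(
    summary: dict[str, int], open_critical_defects: int, min_pass_rate: float = 0.95
) -> list[str]:
    """Return the (hypothetical) exit criteria that are not met; an empty list means all are met."""
    unmet: list[str] = []
    if summary["no run"]:
        unmet.append(f"{summary['no run']} test case(s) not run")
    executed = summary["passed"] + summary["failed"]
    if executed == 0 or summary["passed"] / executed < min_pass_rate:
        unmet.append(f"pass rate below {min_pass_rate:.0%} of executed tests")
    if open_critical_defects:
        unmet.append(f"{open_critical_defects} open critical defect(s)")
    return unmet


if __name__ == "__main__":
    results = {"TC-01": "passed", "TC-02": "passed", "TC-03": "failed", "TC-04": "no run"}
    summary = summarize(results)
    print(summary)
    print(unmet_exit_criteria(summary, open_critical_defects=1))
```

Returning the list of unmet criteria, rather than a single yes/no, gives the reviewer the exact items that the report must explain or that must be resolved before sign-off.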