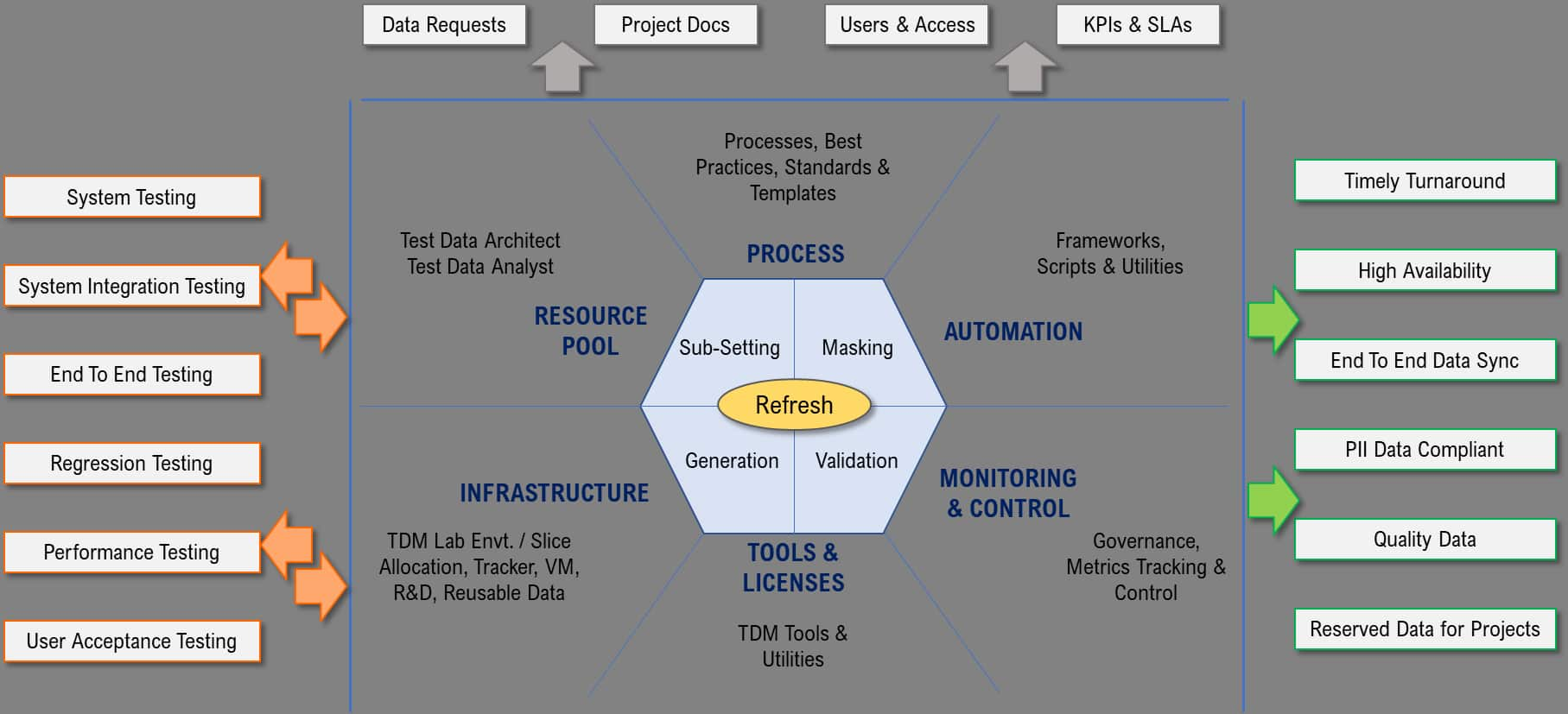

The objective of the enterprise TDM Framework is to establish an enterprise-wide Test Data Management framework that spans tracks and ensures:

- Personally Identifiable Information (PII) compliant data
- Timely test data provisioning
- Data synchronization across applications and interfacing upstream and downstream systems
- Improved test coverage
- Increased test data management efficiency

Test data provisioning is expected to help QE perform System Testing, System Integration Testing, E2E Testing, Regression Testing, Performance Testing, Warranty Testing and UAT effectively, efficiently, intelligently, and strategically. The approach to meeting Test Data Management needs is to set up an enterprise-level Test Data Management (TDM) team and centralize the data provisioning services, with the objective of making TDM faster, more cost-effective, and better.

Resource Pool

The resource pool should be a part of the Product Engineering team. It should comprise a Test Data Architect and Test Data Analysts team. The Test Data Architect should act as a focal point for one or more portfolios depending on their demand/size. The Test Data Architect and Analysts should liaise with QE teams to ratify the test data requirements and ensure clarity. The Enterprise Test Data team should fulfil the data requests directly raised in the tool. The TDM team should also ensure Data Masking is performed for all applications where sensitive elements exist.

Process

The “Process” lever should include the set of Test Data Processes, Standards, Practices and Guidelines. The key data processes are the request-response process flow, end-to-end data refresh, and the data process integration points with the Software Testing Life Cycle.

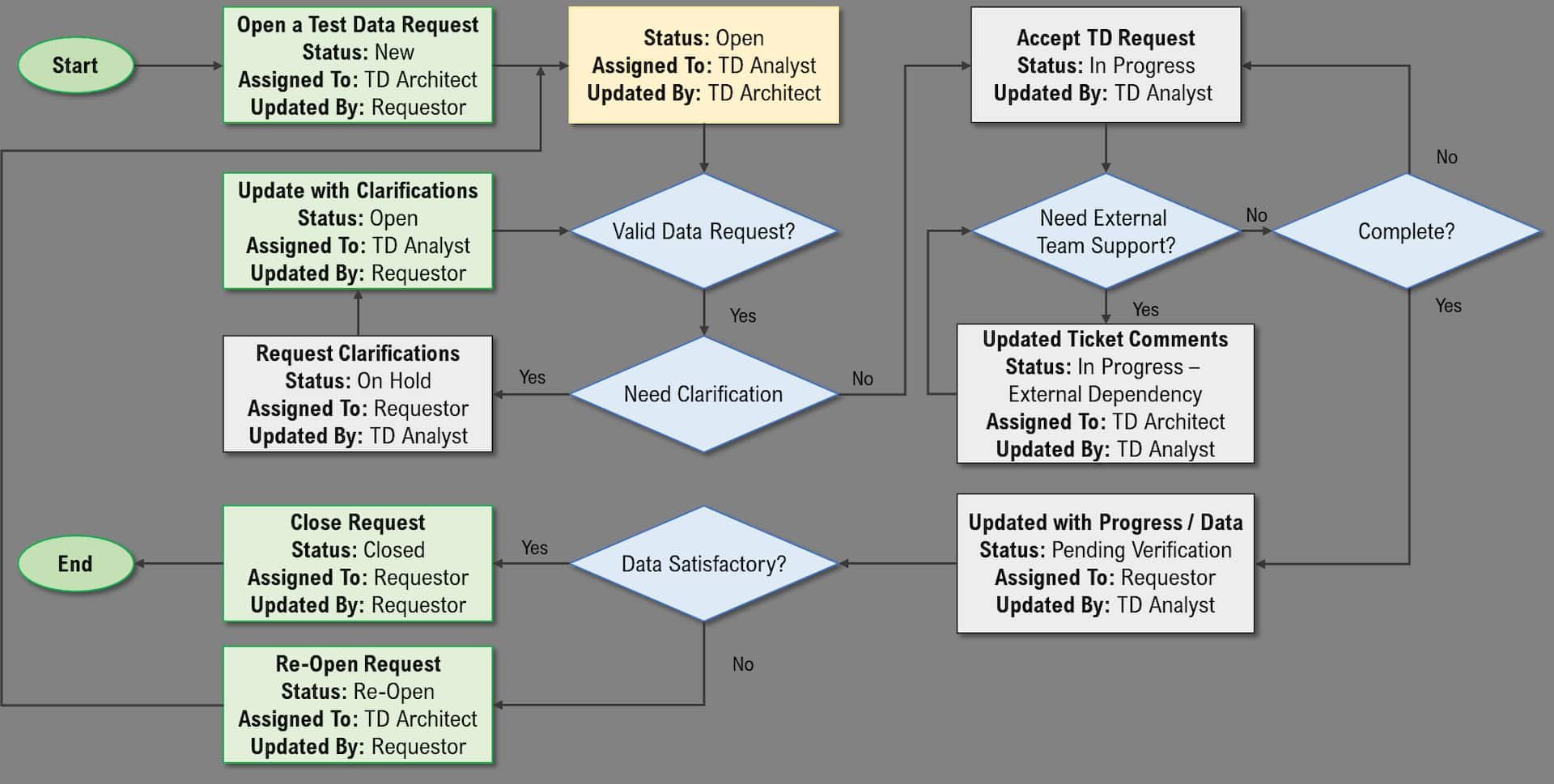

Test Data Request Life Cycle

If the requestor doesn’t close the request within 2 calendar days in the pending verification state, the request can automatically be closed.

Test Data Architect should track and escalate tickets as necessary and enforce the process with the QE and TDM teams.
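The auto-close rule for requests left in the pending verification state can be sketched in code. This is a minimal illustration, not the behavior of any particular TDM tool: the `DataRequest` class, the status strings, and the `auto_close_if_stale` function are hypothetical names, and only the 2-calendar-day threshold comes from the process described here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumed status names; the text only names the "pending verification" state.
PENDING_VERIFICATION = "Pending Verification"
CLOSED = "Closed"

# From the text: a request pending verification for 2 calendar days may be auto-closed.
AUTO_CLOSE_AFTER = timedelta(days=2)


@dataclass
class DataRequest:
    request_id: str
    status: str
    status_since: datetime  # when the request entered its current status


def auto_close_if_stale(request: DataRequest, now: datetime) -> bool:
    """Close the request if it has waited too long for verification.

    Returns True when the request was closed by this call.
    """
    if request.status != PENDING_VERIFICATION:
        return False
    if now - request.status_since < AUTO_CLOSE_AFTER:
        return False
    request.status = CLOSED
    request.status_since = now
    return True
```

A scheduled job could call `auto_close_if_stale` for every open ticket, which leaves the Test Data Architect to escalate only the tickets that are stuck in other states.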

The following diagram illustrates the Test Data Request Life Cycle from initiation to completion.

Detailed illustration of Data Request & Provisioning Approach:

TDM process is initiated in the Assessment stage itself through the Intake team. TD Analysts should analyze the data needs based on business requirements.

Requests should be reviewed by the TD Analyst for completeness of the information.

The TD Analyst should go back to the owner of the request for any clarifications. If clarifications or updates are necessary, the review cycle continues until the request is complete.

Once the request is reviewed and approved, data requests should be categorized as:

- Data to be pulled from Production
- Data to be created using available tools/scripts

TD Analyst should consolidate all such requests and group them as needed based on the trend and characteristics of data pulled from production on previous occasions.

TD Architect should work with Managers of the projects planned in each release to gather details about the environment and data requirements for the projects and allocate test data to different teams within the same environment based on the overall job execution schedule.

The TD Architect can request a test data refresh/restore, batch job execution and a system date change.

In case of new data refresh requests, the Environment management team pulls the data from production based on the data request or uses the latest master / golden copy of data pulled from production and makes the data available for the data masking team to scrub/mask sensitive data fields. Masked data should be deployed to the environment.

If the request is to refresh existing test data in the middle of release testing, the latest master or golden copy of the data (or, for data restore requests, the backed-up data) should be deployed to the test environment.

If it is a request for a system date change, the change is made as per the approved request.

If it is a special batch job execution request, the job is executed as per the approved request.
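The handling of the different approved request types described above can be summarized as a small routing function. This is a sketch under stated assumptions: the `RequestType` enum, the `plan_steps` function, and the step descriptions are hypothetical names chosen for illustration, and the steps simply restate the flow in the text.

```python
from enum import Enum


class RequestType(Enum):
    NEW_REFRESH = "new data refresh"
    MID_RELEASE_REFRESH = "refresh or restore during release testing"
    SYSTEM_DATE_CHANGE = "system date change"
    BATCH_JOB = "special batch job execution"


def plan_steps(request_type: RequestType, use_golden_copy: bool = False) -> list[str]:
    """Return the ordered steps described in the text for one approved request."""
    if request_type is RequestType.NEW_REFRESH:
        source = "load latest golden copy" if use_golden_copy else "pull data from production"
        # Masking happens before the data is deployed to a test environment.
        return [source, "mask sensitive fields", "deploy masked data to environment"]
    if request_type is RequestType.MID_RELEASE_REFRESH:
        return ["deploy golden copy or backed-up data to environment"]
    if request_type is RequestType.SYSTEM_DATE_CHANGE:
        return ["change system date as approved"]
    if request_type is RequestType.BATCH_JOB:
        return ["execute batch job as approved"]
    raise ValueError(f"unsupported request type: {request_type}")
```

Keeping the routing in one place makes it easier to confirm that every path that sources data from production passes through masking before deployment.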

The TD Architect should allocate the test data to each team for new data setup requests & notify the teams about the environment and data availability.

Test data validation is performed by respective teams to ensure that the data has adequate breadth, depth and quality to prevent defect leakages & test errors due to data issues.

The QE team decides on the completeness and correctness of the test data. If incorrect data is delivered, the requestor may reopen the test data request.

If the test data needs are unmet, respective test teams should discuss the same with the TD Architect and request additional test data if needed. The TDM team should review and provide the same.

If the data needs are satisfied, teams need to add the test data to their test cases.

For any specific data that is not available in production, a request can be raised to the tools and automation group to execute scripts that generate the data, working with the respective test teams.

The teams can utilize the tools and scripts to generate the requested data after the data refresh process for the respective environment is complete.

The QE team should execute the tests using the identified test data. If, at any point in time, data is not usable, they should search for similar data & provide feedback to the TDM team on the issues encountered.

QE teams should capture the data state before and after execution.

The TD Architect should build a Master Test Data Bed maintained over multiple releases while working with the respective teams.

Based on the availability of data in the environment and the nature of data requests, the TD Architect may plan the allocation of data to the respective teams and may sometimes ask teams to share data. Some data may be held back as a buffer for ad hoc requests from teams or for new projects added to the release late in the cycle. If a project is dropped from a release, the TD Architect can use their discretion to allocate its data to other teams that require it.

The TD Architect and environment team may use email distribution lists per environment to send notifications on the availability of data and environments and to coordinate special requests such as batch job execution. The success of this process depends on the discipline of the respective teams: each team should use only the data allocated to it and should not use or modify data that belongs to other teams.
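The allocation approach described above (exclusive allocation per team, a buffer for late requests, and reallocation when a project is dropped) can be sketched as follows. This is an illustrative assumption about how such bookkeeping might look; `allocate_with_buffer` and `release_to_buffer` are hypothetical helpers, not part of any named tool.

```python
def allocate_with_buffer(record_ids, demand_by_team, buffer_size):
    """Assign each record to at most one team and hold back a buffer.

    record_ids: available test data record identifiers.
    demand_by_team: mapping of team name -> number of records requested.
    buffer_size: records reserved for ad hoc or late requests.
    Returns (allocation, buffer), where allocation maps team -> list of ids.
    """
    records = list(record_ids)
    if buffer_size < 0 or buffer_size > len(records):
        raise ValueError("buffer_size must be between 0 and the number of records")

    # Hold back the buffer first so late requests are not starved.
    split = len(records) - buffer_size
    allocatable, buffer = records[:split], records[split:]

    allocation = {}
    cursor = 0
    for team, wanted in demand_by_team.items():
        # A team may receive fewer records than requested if supply runs out.
        allocation[team] = allocatable[cursor:cursor + wanted]
        cursor += len(allocation[team])
    # Anything left unallocated joins the buffer.
    buffer = allocatable[cursor:] + buffer
    return allocation, buffer


def release_to_buffer(allocation, buffer, team):
    """Return a dropped project's records to the buffer for reallocation."""
    return buffer + allocation.pop(team, [])
```

Because every record appears in exactly one team's list or in the buffer, the allocation itself documents which data each team is allowed to use or modify.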

TDM Alignment to Test Life Cycle

The table below describes the activities involved in each phase.

| SDLC Phases Agile | SDLC Phases Waterfall | Activity | Steps |
|---|---|---|---|
| Continuous Exploration | Requirements | Test Design | Update Test Strategy to include test data approach; Test Data Initial Estimation; Update ALM tool with updated project / configuration |
| Continuous Exploration | Design | Test Data Creation | Plan for test-data automation related activities; Coordinate with Data masking teams for data generation |
| Continuous Exploration | Test | Test Data Maintenance | |
| Continuous Deployment | Release | Test Closure | Update Test Summary reports |

Test Data Automation

The “automation” lever, in general, could include the data automation framework, scripts, and other utilities developed for a specific purpose. The Enterprise TDM team can develop scripts that slice data into efficient subsets and build scheduled jobs that enable data provisioning. UI or service-layer automation and utilities should be considered where synthetic data generation is needed for improved test coverage and fit-for-use data.
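As an illustration of synthetic data generation, the sketch below produces reproducible customer records that contain no real PII. The record layout (`customer_id`, `name`, `email`, `tier`, `balance`) and the `generate_customers` function are assumptions made for this example; a real script would follow the schema of the application under test.

```python
import random
import string


def generate_customers(count, seed=0):
    """Generate reproducible synthetic customer records containing no real PII."""
    rng = random.Random(seed)  # a local generator keeps runs repeatable
    tiers = ["basic", "silver", "gold"]
    customers = []
    for i in range(count):
        name = "".join(rng.choices(string.ascii_lowercase, k=8)).capitalize()
        customers.append({
            "customer_id": f"SYN-{i + 1:06d}",
            "name": name,
            "email": f"{name.lower()}@example.test",  # .test is a reserved TLD
            "tier": rng.choice(tiers),
            "balance": round(rng.uniform(0, 10_000), 2),
        })
    return customers
```

Seeding the generator means the same data set can be recreated after an environment refresh, which helps when regression tests depend on specific records.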

Tools and Licenses

The Enterprise TDM team should consolidate the related tools and licenses. The key objectives are to:

- Centralize the administration of the test data automation tools and licenses
- Track usage and utilization
- Obtain upgrades and patches
- Anticipate training needs
- Ensure the correctness of any tool-related contractual agreements

Coordination with the tool vendors must be done as necessary. The Detailed TDM Strategy should describe the tools and utilities to be considered, depending on the application where data provisioning needs to be performed.

Infrastructure

The fifth key lever is TDM Infrastructure. It is critical to forecast the Enterprise TDM infrastructure requirements and set the infrastructure up to operate smoothly. Test environments are a prerequisite for data provisioning. The QE team should specify the test environment as part of the data request, and data provisioning should be performed using that as a reference. In case of any environmental issues (sizing, performance, or storage), the TDM team and the QE team should coordinate with the environment team for faster resolution.

Monitoring and Control

Metrics and Key Data Performance Indicators (KPIs) may be identified as success criteria. The following are some of the KPIs that may be set and tracked periodically. At the end of the Pilot phase, benchmarks may be set against which the metrics can then be tracked.

| No. | Key Data Performance Indicator | Description |
|---|---|---|
| 1 | Average Data Provisioning Turnaround Time | Average of the number of business days between request Status = Open and request Status = Pending Verification for all requests, grouped by request type |
| 2 | Average Time in External Dependency | Average number of business days spent in Status = External Dependency, grouped by TDM Request Type |
| 3 | TDM Ageing | Number of business days for requests staying open (open requests are requests in Status = Open, In Progress, External Dependency), grouped by request type |
| 4 | TDM Reopen Rate | # of valid TDM requests reopened / Total # of valid TDM requests created |
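Two of these KPIs can be computed with a few lines of code. The sketch below is an assumption about how request records might be stored: the dictionary keys (`type`, `opened_on`, `pending_verification_on`, `valid`, `reopened`) are hypothetical, and business days are counted as Monday to Friday with holidays ignored.

```python
from collections import defaultdict
from datetime import date, timedelta


def business_days_between(start: date, end: date) -> int:
    """Count weekdays after `start` up to and including `end` (holidays ignored)."""
    days = 0
    current = start
    while current < end:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 .. Friday=4
            days += 1
    return days


def average_turnaround_by_type(requests):
    """KPI 1: mean business days from Open to Pending Verification, per request type."""
    durations = defaultdict(list)
    for r in requests:
        if r.get("pending_verification_on") is None:
            continue  # not yet provisioned, so there is no turnaround to measure
        durations[r["type"]].append(
            business_days_between(r["opened_on"], r["pending_verification_on"])
        )
    return {t: sum(v) / len(v) for t, v in durations.items()}


def reopen_rate(requests):
    """KPI 4: reopened valid requests divided by all valid requests."""
    valid = [r for r in requests if r.get("valid", True)]
    if not valid:
        return 0.0
    return sum(1 for r in valid if r.get("reopened", False)) / len(valid)
```

The same grouping pattern extends to the other two KPIs once the time spent in each status is recorded per request.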

TDM Portal

The TDM Portal will provide enterprise-wide test data self-service capability. The following is a quick overview of TDM Portal functionality.

- Test Data Request Management
- Test Data Delivery
- Command Center

TDM Self-Service Model

The following step-by-step process explains the self-service model:

| Activity | Details |
|---|---|
| Test data request management | |
| Search for data | |
| Reserve data | |

The Test Data provider oversees data provisioning using synthetic data generation, data masking, and data subset methods. The Self-service tool may or may not have all the options like data generation, data masking, and data subset. However, the provider will connect to a specific tool and update the status of the test data request after data provisioning is completed.

Summarized activities to be followed:

The following activities should be followed as part of the self-service model:

1. The requestor raises a test data request with the data requirement
2. The provider reviews the request and seeks clarification if required
3. The provider provisions the data
4. The requestor searches for and locks the data as per test needs
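The search and lock steps of the self-service model can be illustrated with a small in-memory catalog. This is a sketch only: `DataCatalog` and its methods are hypothetical and stand in for whatever search and reservation features the chosen portal actually offers.

```python
class DataCatalog:
    """In-memory stand-in for a self-service portal's search and reserve steps."""

    def __init__(self, records):
        # records: list of dicts, each with a unique "id" plus searchable attributes
        self._records = {r["id"]: r for r in records}
        self._reserved_by = {}  # record id -> requestor holding the lock

    def search(self, **criteria):
        """Return unreserved records whose attributes match all criteria."""
        return [
            r for rid, r in self._records.items()
            if rid not in self._reserved_by
            and all(r.get(k) == v for k, v in criteria.items())
        ]

    def reserve(self, record_id, requestor):
        """Lock a record for one requestor; fail if someone else holds it."""
        if record_id not in self._records:
            raise KeyError(record_id)
        holder = self._reserved_by.get(record_id)
        if holder is not None and holder != requestor:
            return False
        self._reserved_by[record_id] = requestor
        return True

    def release(self, record_id, requestor):
        """Unlock a record, but only for the requestor who reserved it."""
        if self._reserved_by.get(record_id) == requestor:
            del self._reserved_by[record_id]
            return True
        return False
```

Hiding reserved records from search results is one way to prevent two testers from consuming the same data; a portal could equally show them as locked.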

TDM Highlights for a Mature Organization

Centralized TDM Service: Centralized TDM office should serve as a “One Stop Shop” for test data provisioning and recommending tools and technologies. It is recommended that this very important task not be added to the load of testers or the development team.

Holistic TDM Processes: Publish clearly defined data management workflows, policies and procedures for the creation, load, and protection of test data. Define metrics to measure data health in each environment and use reduced volumes of production data when a full load is not needed.

Effective Data Sub-Setting and Masking: Use data subsets to support effective testing. A subset provides real-life data that preserves referential integrity from the source, only on a smaller scale. Use data masking to maintain data security by protecting sensitive attributes.

Gold Copy of Production Environment: Create and maintain a gold copy (repository) of reusable data. This single, enterprise-wide repository helps ensure that consistent, accurate test data is always available for testing purposes.

Maximized Automation: Prioritize automated TDM tools to reduce the manual process of creating test data and reduce the chance of errors. A variety of automated accelerators can help to improve turnaround time.
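The sub-setting and masking highlight can be illustrated with a short sketch. It assumes a hypothetical two-table layout (customers and their orders, with `ssn` and `email` as the sensitive attributes) and uses a keyed hash for deterministic masking; commercial masking tools offer other techniques such as format-preserving substitution.

```python
import hashlib
import hmac


def mask_value(value: str, secret: bytes) -> str:
    """Deterministically replace a sensitive value with a keyed hash token.

    The same input always yields the same token, so values masked in
    different tables still match each other.
    """
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"MASK-{digest[:12]}"


def subset_and_mask(customers, orders, keep_customer_ids, secret):
    """Keep selected customers plus only their orders, then mask sensitive fields."""
    keep = set(keep_customer_ids)
    customer_subset = [
        {**c, "ssn": mask_value(c["ssn"], secret), "email": mask_value(c["email"], secret)}
        for c in customers if c["customer_id"] in keep
    ]
    # Filtering child rows by the kept parent keys preserves referential integrity.
    order_subset = [o for o in orders if o["customer_id"] in keep]
    return customer_subset, order_subset
```

The secret key should be managed outside the test environments, since anyone holding it could test guesses against the masked tokens.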