Objectives

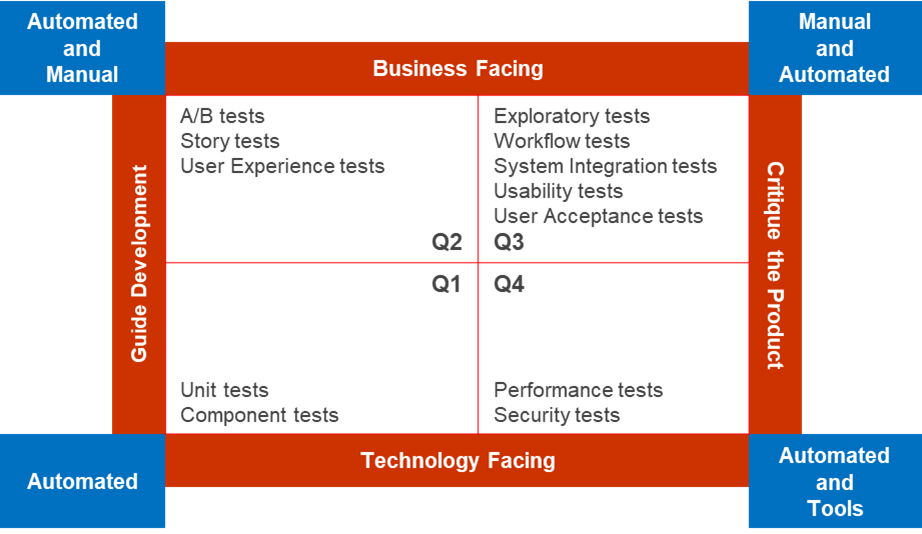

The objective of functional testing is to validate the software's functionality and confirm that it works as specified in the requirements and the defined acceptance criteria.

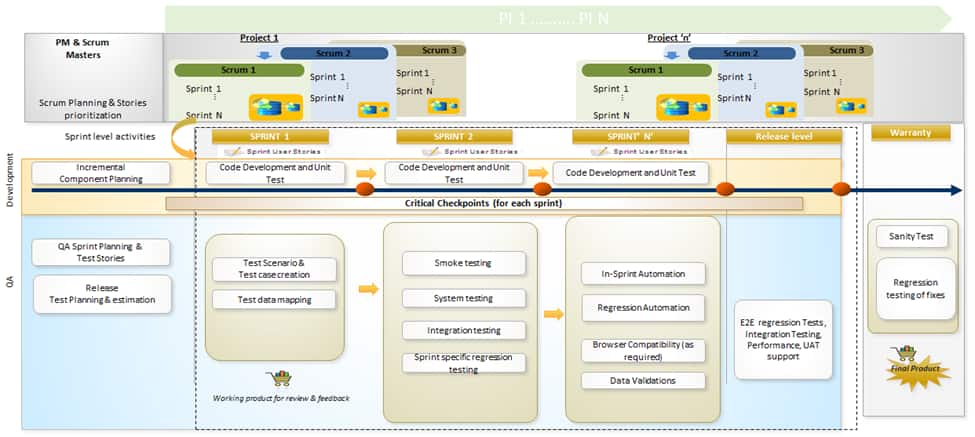

In-Sprint (N, N+1, ...) Activities

| Backlog Grooming | Sprint Planning | Sprint Execution | Hardening |
|---|---|---|---|
| | | | |

Activities by Phase

| Role | Phase | Activity |
|---|---|---|
| Product Owner | Sprint 0 | Update the product backlog and release plan |
| ART Release Quality Manager (RQM) | Sprint 0 | |
| Scrum Master | Release Planning (1-N) | |
| Scrum Master | Sprint Execution (1-N) | |
| QA Specialist (Functional SME) / Test Automation Engineer | Sprint 0 | |
| QA Specialist (Functional SME) / Test Automation Engineer | Sprint Execution (1-N) | |

Final Sprint

All severity 1-3 defects in the new features of Sprint N and N+1 are closed (conditional). Functional and regression testing has been completed and passed.

A full regression of all streams and the core regression are executed

One round of end-to-end testing is carried out

Implementation and Go Live – All sev 1-3 defects are closed as of the hardening phase. Functional, Regression and UAT testing have been completed and passed, and the results summary is reviewed before implementation.
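The go-live criteria above can be expressed as a simple checklist. The following is a minimal sketch, not a prescribed tool: the `Defect` record, the integer severity scale, the `"Closed"` status string and the `go_live_blockers` function are all hypothetical names chosen for illustration, and the "(conditional)" closure case is not modelled.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Defect:
    key: str        # hypothetical defect identifier
    severity: int   # assumption: 1 is the most severe
    status: str     # assumption: "Closed" marks a closed defect


def go_live_blockers(defects: List[Defect], phases_passed: Dict[str, bool]) -> List[str]:
    """List everything that still blocks implementation; an empty list means ready."""
    blockers = [
        f"open sev {d.severity} defect {d.key}"
        for d in defects
        if d.severity <= 3 and d.status != "Closed"
    ]
    # Functional, Regression and UAT testing must all be completed and passed.
    for phase in ("Functional", "Regression", "UAT"):
        if not phases_passed.get(phase, False):
            blockers.append(f"{phase} testing not passed")
    return blockers


defects = [
    Defect("D-1", severity=2, status="Open"),
    Defect("D-2", severity=4, status="Open"),    # sev 4 does not block
    Defect("D-3", severity=1, status="Closed"),
]
phases = {"Functional": True, "Regression": True, "UAT": False}
print(go_live_blockers(defects, phases))
```

Returning the list of blockers rather than a single boolean keeps the output usable for the results summary that is reviewed before implementation.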

Build Deployments During the Sprint

For a regular sprint, planned deployments start on the second day of the sprint. Subsequent builds are deployed twice a week.

An email notification will be sent for both planned and unplanned builds

Automated smoke tests must be triggered automatically once a build is deployed, irrespective of the environment, and the build is accepted or rejected based on the smoke results

For applications whose smoke test is not automated, manual smoke testing is performed, and the results are shared along with the release notes

Only build labels from the smoke results are to be deployed in the QE environment

Quality Engineers to perform functional testing in the sprint

Defects within each sprint should be resolved and tested within the sprint

Any defects listed in the deployment notes need to be validated

Quality Engineers to perform sprint-specific regression and regression of past sprints in the PI

Quality Engineers to share test execution metrics, defect metrics and a status report with all stakeholders daily

Performance team to perform performance testing for the N-1 sprint in the performance environment

SAST is to be run in Dev and QE environments
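The smoke-based build acceptance rule described in this section can be sketched as follows. This is only an illustration under stated assumptions: `SmokeRun` and `qe_deployable_labels` are hypothetical names, the build labels are made up, and "accepted" is assumed to mean that at least one smoke check ran and every check passed.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SmokeRun:
    build_label: str
    checks: Dict[str, bool]   # smoke test name -> passed?
    automated: bool = True    # False when the smoke run was performed manually

    @property
    def accepted(self) -> bool:
        # Assumption: a build with no recorded checks is not accepted.
        return bool(self.checks) and all(self.checks.values())


def qe_deployable_labels(runs: List[SmokeRun]) -> List[str]:
    """Return the build labels that may be deployed to the QE environment."""
    return [run.build_label for run in runs if run.accepted]


runs = [
    SmokeRun("build-101", {"login": True, "search": True}),
    SmokeRun("build-102", {"login": True, "search": False}),
    SmokeRun("build-103", {}, automated=False),   # manual run, no results recorded yet
]
print(qe_deployable_labels(runs))
```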

Test Execution Planning in Test Management tools (qTest)

Squad QE teams will use qTest as the primary test management tool and will manage test case execution by setting conditions and scheduling execution dates. When a test case is executed manually, the tester executes the defined test steps and sets each test step to Pass or Fail depending on whether the stated expected results are observed.

When an automated test script is executed:

qTest shall use the automation tool to execute the automated test script. If the automated script fails, results and screenshots are captured for analysis and reporting.

Failed automated scripts shall be executed manually to determine whether the underlying cause is the application behaviour or the script. If the test case also fails manually, a defect shall be logged and managed per the Defect Management Process.

If the manual test execution completes successfully, the automated script shall be examined for the root cause of the failure, and suitable corrections shall be made to it.
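The handling of a failed automated script amounts to a small decision rule, sketched below. This is an illustrative model only and does not call qTest or any automation tool; the `Outcome` enum and the `triage` function are hypothetical names.

```python
from enum import Enum
from typing import Optional


class Outcome(Enum):
    PASSED = "passed"
    LOG_DEFECT = "log defect"             # application behaviour is the cause
    FIX_SCRIPT = "fix automated script"   # the script itself is the cause


def triage(automated_passed: bool, manual_passed: Optional[bool] = None) -> Outcome:
    """Decide the follow-up action for one test case.

    manual_passed is only consulted when the automated run failed;
    None means the manual re-run has not been performed yet.
    """
    if automated_passed:
        return Outcome.PASSED
    if manual_passed is None:
        raise ValueError("a failed automated script must be re-run manually first")
    # A manual failure points at the application; a manual pass points at the script.
    return Outcome.FIX_SCRIPT if manual_passed else Outcome.LOG_DEFECT


print(triage(False, manual_passed=False).value)
print(triage(False, manual_passed=True).value)
```

Raising an error when the manual re-run is missing makes the rule explicit: no defect is logged and no script is changed until the manual execution has been done.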

Guidelines

| Guideline | Phase |
|---|---|
| Estimation | Sprint 0 |
| Test Strategy | Sprint 0 |
| Test scenario and Test case preparation | Sprint Execution (1-N) |
| Test case design | Sprint Execution (1-N) |
| Defect Management | Sprint Execution (1-N) |
| Risk and Mitigation Plan | Sprint 0 |
| TDM data masking methodology | Sprint Execution (1-N) |
| TDM subset | Sprint Execution (1-N) |
| Test data generation | Sprint Execution (1-N) |
| Test data management | Sprint Execution (1-N) |
| Test data provisioning | Sprint Execution (1-N) |