Overview

Data Testing is different from application testing because it requires a data-centric testing approach. Some of the Key elements in Data Testing are

Data testing involves comparing large volumes of data, typically millions of records.

Data that needs to be compared can be in heterogeneous data sources such as databases, flat files etc.

Data is often transformed, which might require complex SQL queries to compare the data.

Data Warehouse testing is very much dependent on the availability of test data with different test scenarios.

BI tools such as OBIEE, Cognos, Business Objects and Tableau generate reports on the fly based on a metadata model. Testing various combinations of attributes and measures can be a huge challenge.

The volume of the reports and the data can also make it very challenging to test these reports for regression, stress and functionality.

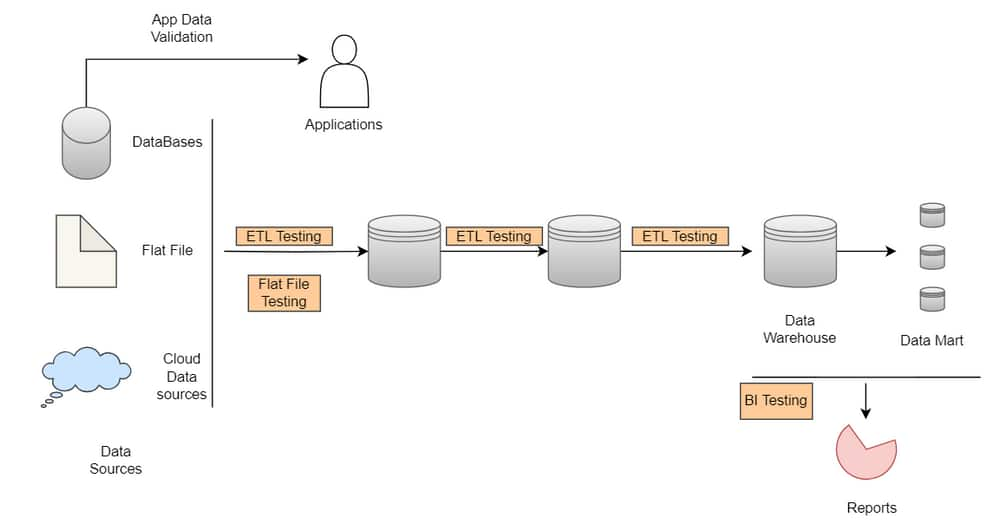

Data Testing Categories

ETL (Extract Transform Load) Testing

Flat File Testing

Business Intelligence (BI) Testing

Application Transactional Database Testing

ETL Regression testing

ETL Regression testing aims to verify that the ETL produces the same output for a given input before and after the change. Any differences need to be validated whether are expected as per the changes. Changes to Metadata Track changes to table metadata in the Source and Target environments. Often changes to source and target system metadata changes are not communicated to the QE and Development teams resulting in ETL and Application failures. This check is important from a regression testing standpoint.

End-to-End Integration Test

Once the data is transformed and loaded into the target by the ETL process, it is consumed by another application or process in the target system. The consuming application is a BI tool for data warehouse projects such as OBIEE, Business Objects, Cognos or SSRS. For a data migration project, data is extracted from a legacy application and loaded into a new application. In a data integration project, data is shared between two different applications, usually regularly. ETL integration testing aims to perform end-to-end testing of the data in the ETL process and the consuming application.

End-to-End Data Testing

Integration testing of the ETL process and the related applications involves the following steps:

Set up test data in the source system.

Execute the ETL process to load the test data into the target.

View or process the data in the target system.

Validate the data and application functionality that uses the data.

ETL Performance Testing

The performance of the ETL process is one of the key issues in any ETL project. Often development environments do not have enough source data for performance testing of the ETL process. This could be because the project has just started, the source system only has a small amount of test data, or production data has PII information, which cannot be loaded into the test database without scrubbing. The ETL process can behave differently with different volumes of data.

Flat File Testing

Data Transformation Testing

Data in the inbound Flat File is transformed by the consuming process and loaded into the target (table or file). It is important to test the transformed data. Two approaches for testing transformations are white box testing and black box testing.

Step 1

- Review the transformation design document.

Step 2

- Prepare test data in the flat file to reflect different transformation scenarios.

Step 3

- Apply transformations on the flat file data using SQL or a procedural language such as PL / SQL to reflect the ETL transformation logic.

Step 4

- Compare the results of the transformed data with the data in the target table or target flat file.

End-to-End Data Testing of Flat File Ingestion

Integration testing of the inbound flat file ingestion process and the related applications involves the following steps:

Step 1

- Estimate expected data volumes in each source flat file for the consuming process for the next 1-3 years.

Step 2

- Set up test data for performance testing either by generating sample flat files or getting sample flat files.

Step 3

- Execute the flat file ingestion process to load the test data into the target.

Step 4

- Executing the flat file ingestion process again with large data in the target tables to identify bottlenecks.

Business Intelligence (BI) Testing

When a new report or dashboard is developed for consumption by other users, performing a few checks to validate the data and design of the included reports is important.

BI Functional Testing

Report or Dashboard Design Check

Verify that the new report or dashboard conforms to the report requirement/design specifications. Some of the items to check are :

Verify that the report or dashboard page title corresponds to the content of the reports.

For reports with charts, the axis should be labelled appropriately.

The data aggregation level in the reports should be as per the report requirements.

Verify that the report or dashboard page design conforms to the design standards and best practices.

Validate the presence and functionality of the report download and print options.

Where applicable, verify that the report help text exists and is appropriate for the report content.

Verify the existence of any required static display text in the report

Prompts Check

Prompts are used to filter the data in the reports as needed. They can be of different types, but the most common type of prompt is a select list or dropdown with a list of values. Some of the key tests for prompts are :

Verify that all the prompts are available as per requirements. Also, check if the type of prompt matches the design specification.

For each prompt, verify the label and list of values displayed (where applicable).

Apply each prompt and verify that the data in the report is getting filtered appropriately.

Verify the default prompt selection satisfies the report or dashboard page design specification.

Report Data Accuracy Check

Verify that the data shown in the report is accurate. This check is a vital aspect of the report’s functional testing.

Cross-check the report with data shown in a transactional system application that is trusted by the users as the source of truth for the data shown in the report.

Come up with an equivalent database query on the target and source databases for the report. Compare the results from the queries with the data in the report.

Review the database query generated by the report for any issues.

Apply reports prompts and validate the database query generated by the report as well as the query output.

Drilldown Report Checks

It is common to have links to drill down reports in a report so the user can navigate those reports for further details. Links to these reports can be at the column or heading levels. For each link to the drill-down report, verify the following items :

Verify that the counts are matching between the summary and detail report where appropriate.

Verify that all the summary report prompts are applied to the detail report.

Check if the links to the detail report from the summary report are working from charts, tables, and table headings.

Verify the database SQL query for the drill-down report is as expected.

Report Performance Checks

Verify that the reports and dashboard page rendering times are meeting SLA requirements. Test the performance for different prompt selections. Perform the same checks for the drill-down reports.

Browser Checks

Browser compatibility of the reports is often dictated by the support of these browsers by the BI tool being used for the BI project. Any custom Java script additions to the report or dashboard page can also result in browser-specific report issues.

BI Security Testing

Like any other web application, BI applications also have authentication and authorization security requirements. BI applications are often integrated with single sign-on or embedded with other transactional applications. It is important to test the security aspects of the BI application just like other web applications.

Report Access Security

This test aims to validate that the BI user's access to the BI reports, subject areas and dashboards is limited according to their access levels. Role-based security in the BI tool generally controls access to the reports.

Understand how access to reports is different for different roles.

Identify users with those roles for testing.

Login as these users and validate access to the reports and subject areas.

Data Security

In this form of security, different users have access to a report, but the data shown in the report is different based on the person running the report.

Single Sign-on Security

Single sign-on is often used as the authentication mechanism for BI applications in large enterprises. This testing aims to ensure that users can access BI applications using their single sign-on access (or Windows authentication).

Integrated Security

BI Applications are sometimes embedded as part of other transaction system applications using a common authentication mechanism. This integration needs to be tested for different users.

BI Regression Testing

BI tools make it easy to create new reports by automatically generating the database query dynamically based on a predefined BI Model. This presents a challenge from a regression testing standpoint because any change to the BI Model can potentially impact existing reports. Hence it is important to do a complete regression test of the existing reports and dashboards whenever there is an upgrade or change in the BI Model.

BI Stress Testing

BI Stress testing is similar to testing of any web-based application testing. The objective is to simulate concurrent users accessing reports with different prompts and understand the bottlenecks in the system.

Simulating User Behavior

A typical BI user will log into the system, navigate to reports (or dashboards), apply prompts, and drill down to other reports. After the report is rendered, the BI User reviews the data for a certain time called think time. The BI user eventually logs out of the system. The first step in conducting a stress test is to identify a list of the most commonly used reports or dashboards for the testing.

Simulating Concurrent User Load

Conducting a stress test requires concurrently simulating the above BI user behaviour for different user loads. So it is important to have a list of different user logins for the stress test. When executing the reports, each user can pick a different set of prompt values for the same report. The stress test should be able to randomize each user's prompt selection to generate a more realistic behaviour.

BI Cache

Most of the BI tools support a caching mechanism to improve the performance of the reports. Database queries and the data blocks used to generate the results are also cached in databases. The question that frequently comes up is whether the cache should be turned off for stress testing. While turning off caching is a good way to understand system bottlenecks, we recommend performing the stress test with the caching turned on because it is closer to what a production system would look like. The following points should be kept in mind to reduce the impact of caching :

Include a large set of reports and dashboard pages.

Randomize prompt values for a given report or dashboard page to request the same report with different filter conditions.

User a large pool of user login so that different user-based security is applied during the execution.

Stress testing is an iterative process. When the first round of stress tests is conducted, a particular component of the system (e.g. database cache) might max out and bring down the response times. Once this bottleneck has been addressed, another round of stress tests might find another bottleneck. This process must continue until the target concurrent user load and report performance SLAs are met.

Performance Measurements

There are several performance data points that need to be captured while running a stress test:

Initial and final response time for each of the report and dashboard pages.

Time spent for each request in the database and in the BI tool.

CPU and memory utilization in the server machines (BI Tool and database).

Load balancing across all the nodes in a cluster.

The number of active sessions and the number of report requests.

Busy connection count in database Connection pool and current queued requests.

Network load.

Database cache and disk reads.